Fixing a JumpServer Upgrade Failure

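JumpServer released its LTS version, 4.10, on May 27, so I figured I would upgrade our existing bastion host. Once 4.10.1 came out a couple of days ago, I used a free evening to run the upgrade. And then it went down. 2025.06...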

A Letter Home to a Primary School Graduate

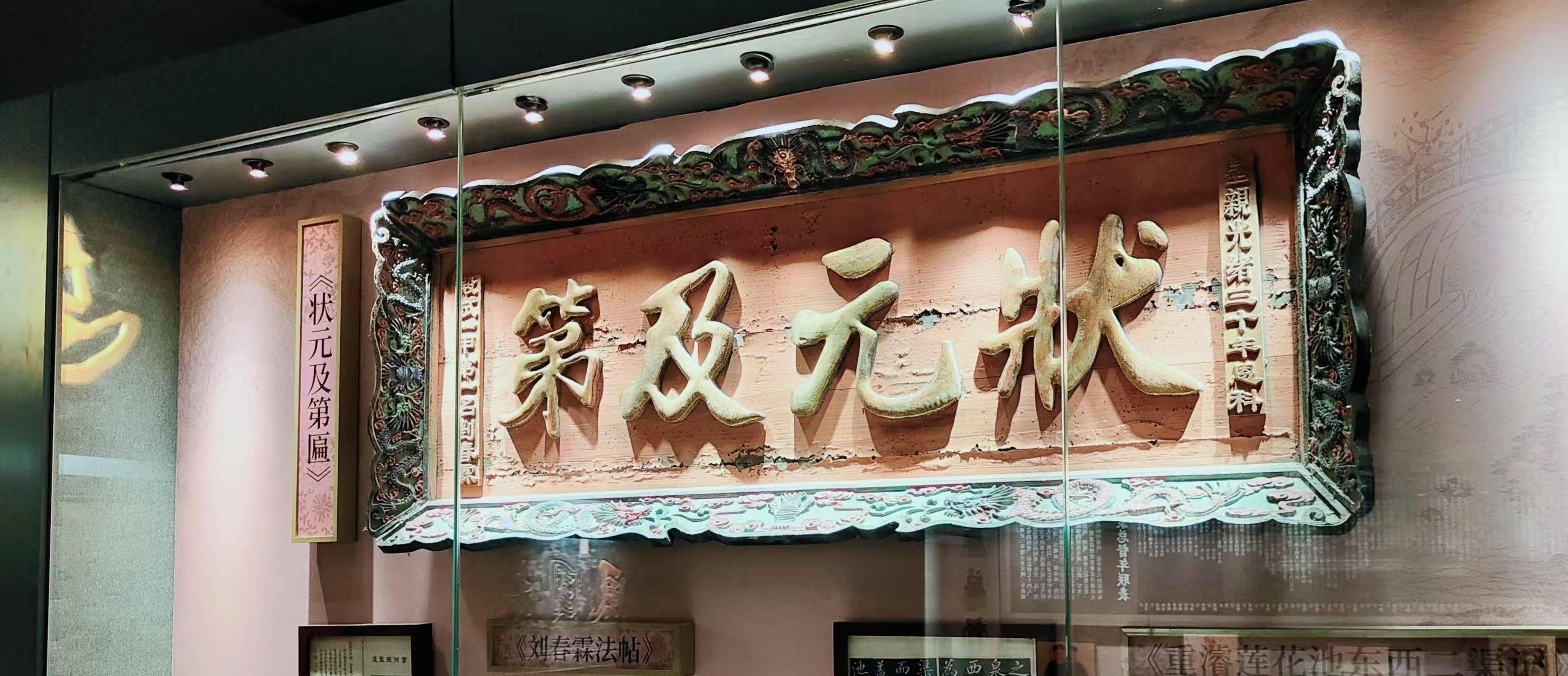

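A couple of days ago, my kid's homeroom teacher asked in the parents' group chat for each parent to secretly write a letter to their child, to be handed over as a small "surprise" at a class event before the holidays. My wife said this one was my job, so I had better write something.

In short: I shall no longer be able to visit Baicao Garden often. Ade, my crickets! Ade, my raspberries and climbing figs! (Lu Xun, "From Baicao Garden to Sanwei Study")

2025.05 Hebei · Baoding · Baoding Museum. The "Zhuangyuan Jidi" plaque of Liu Chunlin, the last zhuangyuan (top-ranked imperial examination scholar) of the Qing dynasty.

Happy Children's Day, my little one! This June 1 is a special one, because it is your last Children's Day in primary school. That means you will soon wave goodbye to your primary school campus and proudly join the ranks of middle schoolers. ...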

Contract Format and Drafting

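Contract clauses are the product of the parties' mutual assent and the form in which a contract's content is expressed. They are the basis for determining the parties' rights and obligations. 2025.05...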

The Birth of a Library

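TaleBook is an open-source project: a simple yet powerful personal book management system. It is an online library built on the Calibre and Calibre-WEB projects, offering book management, online reading and push delivery, user management, SSO login, and book metadata lookup from Baidu and Douban.

2025.03 Beijing · Fangshan · Zhoukoudian Peking Man Site Museum

Installation

```shell
# Create the directory
mkdir library
# Pull the image
podman pull talebook/talebook
# Start the container and configure the mounted directory
podman run -d --name talebook -p 8080:80 -v ~/library:/data talebook/talebook
# Generate the systemd unit file for autostart
podman generate systemd talebook > ~/docker/talebook.service
# Copy it into the designated directory
cp docker/talebook.service /etc/systemd/system/
# Enable the service at boot
systemctl daemon-reload
systemctl enable --now...
```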

Configuring Two-Step Verification on a Linux Host

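Setting up two-step verification for logins on a Linux host looks simple but is actually quite fiddly, mainly because the SSH and PAM configuration has many pitfalls. Keep one SSH session open while you configure it, in case something goes wrong.

2025.05 Hebei · Baoding · Dingzhou · Dingzhou Museum. Silver-thread jade burial suit of Liu Chang, King Mu of Zhongshan (Eastern Han).

Background / Two-step verification / What is two-step verification / How to use two-step verification / Security weaknesses / ManyTimePad attack / OTP bypass / OTP bots

Implementation

There is a standalone ECS instance exposed to the public internet, and for various reasons restricting SSH logins with ACLs is a bit of a hassle. The plan is therefore to use key-based login plus OTP to meet compliance requirements.

Note that the system runs Fedora 41.

Installation

```shell
# Update the system
dnf -y update
# Install google-authenticator and qrencode, a tool that renders QR codes in the terminal
dnf install -y google-authenticator qrencode
```

...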
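Key-based login plus OTP is commonly wired up with OpenSSH's `AuthenticationMethods publickey,keyboard-interactive` directive in `sshd_config`, together with an `auth required pam_google_authenticator.so` line in `/etc/pam.d/sshd`. The excerpt does not show the post's actual configuration, so treat both lines as an assumption. As a minimal sketch, the hypothetical helper `requires_key_and_otp` below checks an sshd_config-style file for that directive, the kind of check worth running before restarting sshd while the spare SSH session is still open.

```shell
# Hypothetical helper (not from the original post): succeed only if the given
# sshd_config-style file chains public-key and keyboard-interactive (PAM/OTP) auth.
requires_key_and_otp() {
    # $1: path of the config file to inspect
    grep -Eiq '^[[:space:]]*AuthenticationMethods[[:space:]]+publickey,keyboard-interactive([[:space:]]|$)' "$1"
}

# Example: inspect the live config if it is readable (default path assumed)
if [ -r /etc/ssh/sshd_config ]; then
    if requires_key_and_otp /etc/ssh/sshd_config; then
        echo "key + OTP required"
    else
        echo "key + OTP not enforced"
    fi
fi
```

Running `sshd -t` before restarting the service also catches syntax errors in the edited file, which helps avoid locking yourself out.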

A Handy Navigation Dashboard

Sun-Panel is a NodeJS-based navigation dashboard that is attractive and easy to use. Its interface is clean and its memory footprint is small, and thanks to steady improvement and optimization by the uploader who maintains it, it has gained many features and is very pleasant to use.

2025.02 Guangdong · Dongguan · Daoxiang Lake · Huawei campus

Installation

```shell
# Create the directories
mkdir -p ./sunpanel/{conf,uploads,database}
# Pull the image
podman pull hslr/sun-panel:latest
# Start the container
podman run -d --restart=always -p 3002:3002 -v ~/sunpanel/conf:/app/conf -v ~/sunpanel/uploads:/app/uploads -v ~/sunpanel/database:/app/database --name homepage...
```

「置身事内」 (Being an Insider): Government and Economic Development

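A rigorous conceptual framework certainly helps clarify problems, but it also often leads people to mistake the problem for the answer. The social sciences always long to discover a set of methods and laws that hold everywhere, but that mindset needs to mature. Do not underestimate the complexity of economic reality, and do not overestimate the quality of scientific tools. 2024.08...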

NVMe or Not NVMe? Are You Doing It Wrong?

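Storage technology is moving fast, and NVMe is stealing the spotlight. But is it worth the hype? Should you ditch SATA SSDs, or even HDDs, for NVMe? Let's break it down: this is my guide to NVMe use cases and to whether it is kind to your wallet over time. We will cover performance, cost, and practical considerations such as space, cooling, and power.

What is NVMe? NVMe, or Non-Volatile Memory Express, is a protocol built for speed. Unlike SATA SSDs, which rely on older technology designed for spinning hard drives, NVMe uses the PCIe interface for a direct, high-bandwidth connection to the CPU. That means much faster data transfer, roughly 3,500-7,400 MB/s compared with SATA's 600 MB/s ceiling. It is like upgrading from a bicycle to a sports car 🙂. NVMe also supports multiple queues (up to 64,000) that handle large numbers of commands at once, which lowers storage latency. That is great for heavy workloads, right? But does everyone need that kind of power?

Who needs NVMe? NVMe shines in specific scenarios. For enterprises, it is a game changer in data centers, artificial intelligence, machine learning, and high-frequency trading, where every microsecond counts. Even casual users will notice faster boot times and file transfers. But if you only browse the web or do light office work, SATA...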
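To put the throughput figures above in perspective, here is a small sketch that converts them into transfer times. The 100 GB payload (100,000 MB, decimal units) is an assumed example, `transfer_seconds` is a hypothetical helper, and the calculation treats the quoted rates as sustained, which real drives rarely manage for an entire transfer.

```shell
# Hypothetical helper: seconds needed to move a payload at a sustained rate.
# Arguments: $1 = size in MB, $2 = throughput in MB/s.
transfer_seconds() {
    awk -v size="$1" -v rate="$2" 'BEGIN { printf "%.1f\n", size / rate }'
}

size_mb=100000   # assumed example payload: 100 GB expressed in MB
for rate in 600 3500 7400; do
    printf '%5d MB/s: %s s\n' "$rate" "$(transfer_seconds "$size_mb" "$rate")"
done
```

Under these assumptions the SATA ceiling works out to just under three minutes, while the NVMe range lands between about 14 and 29 seconds.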

「New Media Public Opinion Monitoring and Management」

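Every individual believes that they think independently, that their ideas are independent and original, and that their choices flow from their own free will. Yet what emerges in aggregate is a shared uniformity.

Humanity has passed through several historical stages of communication: the oral, written, print, and electronic eras. With the arrival of the internet age, new ways of producing, publishing, and spreading information have transformed how public opinion arises and develops, and have given online public opinion far greater influence than the word-of-mouth public opinion of the past. 2025.03...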