Basic Docker Usage


Introduction

What is Docker

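Docker is an open-source container engine written in Go and open-sourced in 2013 (it grew out of the PaaS company dotCloud). It is known for fast delivery and low resource overhead. Docker uses a client/server architecture: the client creates and manages containers through the daemon's remote API. Its three guiding ideas are build, ship, and run. It relies on Linux namespaces and cgroups to isolate container resources and to provide security.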

Docker components

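- Docker host: a physical or virtual machine that runs the Docker daemon and the containers;

- Docker server: the Docker daemon (dockerd), which runs the containers;

- Docker client: the docker command, or another tool, that calls the daemon's API;

- Docker registry: stores images and is conceptually similar to a version-control system such as Git;

- Docker image: a template used to create container instances;

- Docker container: a running instance created from an image that provides one service or a group of services.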

Namespaces

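Namespaces keep the runtime environments of containers isolated from one another. Each process appears to have its own isolated instance of the global system resources.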

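- Mount (MNT) namespace: isolates filesystem mount points

- IPC namespace: isolates inter-process communication

- UTS namespace: isolates the hostname and domain name

- PID namespace: isolates process IDs

- Network (Net) namespace: isolates network stacks

- User namespace: isolates user and group IDs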
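You can observe these namespaces on a live host by reading the links under /proc/<pid>/ns. The bash sketch below assumes Linux with /proc mounted. The function names `list_namespaces` and `same_namespace` are made up for this example. Two processes share a namespace when their links resolve to the same inode.

```bash
#!/usr/bin/env bash
# Print "type -> type:[inode]" for every namespace a process belongs to.
# Defaults to the calling process ("self").
list_namespaces() {
  local pid="${1:-self}" ns
  for ns in /proc/"$pid"/ns/*; do
    printf '%s -> %s\n' "${ns##*/}" "$(readlink "$ns")"
  done
}

# Exit status 0 if two processes share the given namespace type (net, pid, mnt, ...).
# Reading another process's namespace links generally requires root.
same_namespace() {
  local type="$1" pid_a="$2" pid_b="$3" a b
  a="$(readlink "/proc/$pid_a/ns/$type")" || return 2
  b="$(readlink "/proc/$pid_b/ns/$type")" || return 2
  [ "$a" = "$b" ]
}
```

For example, run `same_namespace net 1 "$(docker inspect -f '{{.State.Pid}}' nginx-1)"` as root. It would be expected to return non-zero for a container on the default bridge network, because that container has its own network namespace.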

Control Groups

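A container is essentially a process. To keep the processes in a container under control, Docker uses Linux cgroups to cap the resources a container can use, including CPU, memory, disk I/O, and network bandwidth. cgroups can also set process priorities, and can suspend and resume processes.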


# Check that the kernel was built with cgroup support

[root@vms101 ~]# cat /boot/config-3.10.0-1160.90.1.el7.x86_64 |grep CGROUP

CONFIG_CGROUPS=y

# CONFIG_CGROUP_DEBUG is not set

CONFIG_CGROUP_FREEZER=y

CONFIG_CGROUP_PIDS=y

CONFIG_CGROUP_DEVICE=y

CONFIG_CGROUP_CPUACCT=y

CONFIG_CGROUP_HUGETLB=y

CONFIG_CGROUP_PERF=y

CONFIG_CGROUP_SCHED=y

CONFIG_BLK_CGROUP=y

# CONFIG_DEBUG_BLK_CGROUP is not set

CONFIG_NETFILTER_XT_MATCH_CGROUP=m

CONFIG_NET_CLS_CGROUP=y

CONFIG_NETPRIO_CGROUP=y

# List the cgroup hierarchies mounted on the system

[root@vms101 ~]# ll /sys/fs/cgroup/

total 0

drwxr-xr-x. 4 root root 0 May 9 03:40 blkio

lrwxrwxrwx. 1 root root 11 May 9 03:40 cpu -> cpu,cpuacct

lrwxrwxrwx. 1 root root 11 May 9 03:40 cpuacct -> cpu,cpuacct

drwxr-xr-x. 4 root root 0 May 9 03:40 cpu,cpuacct

drwxr-xr-x. 2 root root 0 May 9 03:40 cpuset

drwxr-xr-x. 4 root root 0 May 9 03:40 devices

drwxr-xr-x. 2 root root 0 May 9 03:40 freezer

drwxr-xr-x. 2 root root 0 May 9 03:40 hugetlb

drwxr-xr-x. 4 root root 0 May 9 03:40 memory

lrwxrwxrwx. 1 root root 16 May 9 03:40 net_cls -> net_cls,net_prio

drwxr-xr-x. 2 root root 0 May 9 03:40 net_cls,net_prio

lrwxrwxrwx. 1 root root 16 May 9 03:40 net_prio -> net_cls,net_prio

drwxr-xr-x. 2 root root 0 May 9 03:40 perf_event

drwxr-xr-x. 4 root root 0 May 9 03:40 pids

drwxr-xr-x. 4 root root 0 May 9 03:40 systemd


cgroup subsystems

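- blkio: limits block-device I/O

- cpu: uses the scheduler to control the tasks' access to the CPU

- cpuacct (for example cpuacct.stat): generates reports on the CPU usage of tasks

- cpuset: assigns CPUs on multi-core systems

- memory: sets memory limits for tasks and generates memory usage reports

- ns: the namespace subsystem (obsolete; it does not appear in the listing above)

- net_cls: tags network packets with a class ID so the traffic controller can identify packets from a cgroup's tasks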
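To see a cgroup limit in action, start a container with `-m` and read the value Docker wrote into the memory controller. The sketch below makes a few assumptions:

- the host uses cgroup v1, as the docker info output in this post reports;
- containers live under one of the two usual path layouts, memory/docker/<id> for the cgroupfs driver and memory/system.slice/docker-<id>.scope for the systemd driver.

`cgroup_mem_limit` is a hypothetical helper. Its cgroup root is a parameter, so you can point it at a test tree.

```bash
#!/usr/bin/env bash
# Read a container's memory limit (bytes) from a cgroup v1 hierarchy.
# Tries the cgroupfs-driver layout first, then the systemd-driver layout.
cgroup_mem_limit() {
  local root="${1:-/sys/fs/cgroup}" id="$2" f
  for f in "$root/memory/docker/$id/memory.limit_in_bytes" \
           "$root/memory/system.slice/docker-$id.scope/memory.limit_in_bytes"; do
    if [ -r "$f" ]; then
      cat "$f"
      return 0
    fi
  done
  echo "no memory cgroup found for $id" >&2
  return 1
}
```

For example, run `docker run -d --name mem-test -m 256m nginx`, then `cgroup_mem_limit /sys/fs/cgroup "$(docker inspect -f '{{.Id}}' mem-test)"`. This should print 268435456 (256*1024*1024). The container name mem-test is only an example.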

Core technologies

Container specifications (OCI)

- runtime spec

- image format spec

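Container runtimes

Container management tools

- rkt: managed with the rkt CLI

- runc: managed through Docker Engine

- LXC/LXD: managed with the lxc tools

Container definition tools

- Docker Image: the template for Docker containers

- Dockerfile: a text file containing a series of instructions; you build a Docker image by defining a Dockerfile

- ACI (App Container Image): the image format of CoreOS's rkt, similar in role to a Docker image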

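Image registries

An image registry is a central place that stores images, including multiple versions of different images.

- Docker Registry: Docker's official tool for deploying a private registry

- Docker Hub: Docker's official public registry

- Harbor: a registry from VMware with a web UI and built-in authentication

Orchestration tools

Container orchestration usually covers container management, scheduling, cluster definition, and service discovery.

- Docker Swarm: the orchestration engine developed by Docker

- Kubernetes: an orchestration engine started by Google, building on its internal system Borg; supports Docker and CoreOS rkt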

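Supporting technologies

Container networking

Docker's built-in networking (docker network) manages container networks on a single host. Running containers across multiple hosts requires Swarm's overlay driver or third-party open-source network plugins such as Calico or Flannel.

Service discovery

Because containers scale dynamically, their IP addresses change. A mechanism is therefore needed that detects new containers automatically and forwards user requests to them. Kubernetes has built-in service discovery, which works with kube-dns to resolve service names.

Container monitoring

The native commands docker ps, docker top, and docker stats show container status. Third-party tools such as Prometheus can also monitor containers.

Data management

When a container migrates between hosts, its data has to move with it, so a solution for data storage and mounting is required.

Log collection

Docker's native log viewer is docker logs. Logs produced inside containers, however, need dedicated tools such as ELK for collection, analysis, and visualization.

Installing Docker

Installation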


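# Example on CentOS 7, using the Huawei Cloud mirror

# Set the default firewalld zone to trusted (this accepts all incoming traffic; suitable for a lab host only)

firewall-cmd --set-default-zone=trusted

# Configure the Docker CE repository and point it at the mirror

wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# Update the system, remove old Docker packages, and install the dependencies

yum makecache fast

yum update -y

yum remove -y docker docker-common docker-selinux docker-engine

yum install -y yum-utils device-mapper-persistent-data lvm2 psmisc net-tools

# Install the latest docker-ce (to pin a version, list candidates with "yum list docker-ce --showduplicates" and install docker-ce-<VERSION>)

yum install -y docker-ce

# Configure a registry mirror and the systemd cgroup driver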

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://37y8py0j.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl daemon-reload

systemctl restart docker

# Enable Docker at boot and make sure it is running

systemctl enable --now docker

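# Set kernel parameters; the net.bridge.* keys exist only when the br_netfilter module is loaded

modprobe br_netfilter

cat > /etc/sysctl.d/k8s.conf << EOF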

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system

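You can check that the kernel parameters actually took effect after `sysctl --system`. One approach is to compare each `key = value` line of the file with the value under /proc/sys, where the dots in the key become slashes. `verify_sysctl` is a hypothetical helper in bash. It assumes single-token values with no embedded spaces.

```bash
#!/usr/bin/env bash
# Compare every "key = value" line of a sysctl.d file with the running kernel.
# Prints OK/FAIL per key and returns 1 if any key differs or is missing.
verify_sysctl() {
  local file="$1" key want have rc=0
  while IFS='=' read -r key want; do
    key="${key//[[:space:]]/}"          # strip all whitespace
    want="${want//[[:space:]]/}"
    case "$key" in ''|'#'*|';'*) continue ;; esac   # skip blanks and comments
    have="$(cat "/proc/sys/${key//.//}" 2>/dev/null)"  # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [ "$have" = "$want" ]; then
      echo "OK   $key = $have"
    else
      echo "FAIL $key: want $want, have ${have:-<missing>}"
      rc=1
    fi
  done < "$file"
  return "$rc"
}
```

Usage: `verify_sysctl /etc/sysctl.d/k8s.conf`. A `<missing>` value for the net.bridge keys usually means br_netfilter is not loaded.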

Verifying the service


# Show version information

[root@vms101 ~]# docker version

Client: Docker Engine - Community

Version: 23.0.5

API version: 1.42

Go version: go1.19.8

Git commit: bc4487a

Built: Wed Apr 26 16:18:56 2023

OS/Arch: linux/amd64

Context: default

Server: Docker Engine - Community

Engine:

Version: 23.0.5

API version: 1.42 (minimum version 1.12)

Go version: go1.19.8

Git commit: 94d3ad6

Built: Wed Apr 26 16:16:35 2023

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.20

GitCommit: 2806fc1057397dbaeefbea0e4e17bddfbd388f38

runc:

Version: 1.1.5

GitCommit: v1.1.5-0-gf19387a

docker-init:

Version: 0.19.0

GitCommit: de40ad0

# Check the docker0 bridge interface

[root@vms101 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:e6:56:9d:47 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# Show system-wide information

[root@vms101 ~]# docker info

Client:

Context: default

Debug Mode: false

Plugins:

buildx: Docker Buildx (Docker Inc.)

Version: v0.10.4

Path: /usr/libexec/docker/cli-plugins/docker-buildx

compose: Docker Compose (Docker Inc.)

Version: v2.17.3

Path: /usr/libexec/docker/cli-plugins/docker-compose

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 1

Server Version: 23.0.5

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Using metacopy: false

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 2806fc1057397dbaeefbea0e4e17bddfbd388f38

runc version: v1.1.5-0-gf19387a

init version: de40ad0

Security Options:

seccomp

Profile: builtin

Kernel Version: 3.10.0-1160.90.1.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 3.839GiB

Name: vms101

ID: 589aba66-198e-4805-9ae2-518d17585521

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Experimental: false

Insecure Registries:

127.0.0.0/8

Registry Mirrors:

https://37y8py0j.mirror.aliyuncs.com/

Live Restore Enabled: false

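In the docker info output above, Cgroup Driver still reads cgroupfs, although daemon.json sets native.cgroupdriver=systemd. One possible explanation is that the output was captured before the new configuration took effect. A small bash check can confirm which driver the running daemon uses, based on the `{{.CgroupDriver}}` field of `docker info --format`. `check_cgroup_driver` and the `DOCKER` override variable are hypothetical names added so the function can be tested with a stub.

```bash
#!/usr/bin/env bash
# Report the cgroup driver of the running daemon and compare it with the expected one.
# Set DOCKER to another command (e.g. a stub) to override the docker CLI.
check_cgroup_driver() {
  local want="${1:-systemd}" have
  have="$(${DOCKER:-docker} info --format '{{.CgroupDriver}}')" || return 2
  if [ "$have" = "$want" ]; then
    echo "cgroup driver: $have"
  else
    echo "cgroup driver is $have, expected $want; check /etc/docker/daemon.json and restart docker" >&2
    return 1
  fi
}
```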

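Service processes

- dockerd: accessed directly by the client; its parent process is the host's systemd

- docker-proxy: forwards traffic for published container ports; its parent process is dockerd

- containerd: called by dockerd to drive container operations through runc

- containerd-shim: the process that actually hosts a container; containerd launches it, but the shim detaches, so its parent in the process tree below is systemd (PID 1)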


# Show the host process tree

[root@vms101 ~]# pstree -p 1

systemd(1)─┬─NetworkManager(881)─┬─{NetworkManager}(886)

│ └─{NetworkManager}(892)

├─containerd(1191)─┬─{containerd}(1208)

│ ├─{containerd}(1212)

│ ├─{containerd}(1213)

│ ├─{containerd}(1214)

│ ├─{containerd}(1242)

│ ├─{containerd}(1253)

│ ├─{containerd}(1254)

│ └─{containerd}(1285)

├─containerd-shim(2030)─┬─nginx(2051)─┬─nginx(2101)

│ │ └─nginx(2102)

│ ├─{containerd-shim}(2031)

│ ├─{containerd-shim}(2032)

│ ├─{containerd-shim}(2033)

│ ├─{containerd-shim}(2034)

│ ├─{containerd-shim}(2035)

│ ├─{containerd-shim}(2036)

│ ├─{containerd-shim}(2037)

│ ├─{containerd-shim}(2038)

│ ├─{containerd-shim}(2039)

│ └─{containerd-shim}(2040)

├─containerd-shim(2139)─┬─nginx(2159)─┬─nginx(2212)

│ │ └─nginx(2213)

│ ├─{containerd-shim}(2141)

│ ├─{containerd-shim}(2142)

│ ├─{containerd-shim}(2143)

│ ├─{containerd-shim}(2144)

│ ├─{containerd-shim}(2145)

│ ├─{containerd-shim}(2146)

│ ├─{containerd-shim}(2147)

│ ├─{containerd-shim}(2153)

│ ├─{containerd-shim}(2179)

│ ├─{containerd-shim}(2180)

│ └─{containerd-shim}(2181)

├─dockerd(1290)─┬─docker-proxy(2011)─┬─{docker-proxy}(2012)

│ │ ├─{docker-proxy}(2013)

│ │ ├─{docker-proxy}(2014)

│ │ ├─{docker-proxy}(2015)

│ │ └─{docker-proxy}(2016)

│ ├─docker-proxy(2017)─┬─{docker-proxy}(2018)

│ │ ├─{docker-proxy}(2019)

│ │ ├─{docker-proxy}(2020)

│ │ ├─{docker-proxy}(2021)

│ │ └─{docker-proxy}(2022)

│ ├─docker-proxy(2121)─┬─{docker-proxy}(2122)

│ │ ├─{docker-proxy}(2123)

│ │ ├─{docker-proxy}(2124)

│ │ ├─{docker-proxy}(2125)

│ │ └─{docker-proxy}(2126)

│ ├─docker-proxy(2127)─┬─{docker-proxy}(2128)

│ │ ├─{docker-proxy}(2129)

│ │ ├─{docker-proxy}(2130)

│ │ ├─{docker-proxy}(2131)

│ │ └─{docker-proxy}(2132)

│ ├─{dockerd}(1373)

│ ├─{dockerd}(1374)

│ ├─{dockerd}(1375)

│ ├─{dockerd}(1376)

│ ├─{dockerd}(1462)

│ ├─{dockerd}(1463)

│ ├─{dockerd}(1464)

│ └─{dockerd}(1868)

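In the tree above, each nginx master process sits under a containerd-shim, and the shims are children of systemd. You can check this relationship for a single container by walking the PPid field in /proc/<pid>/status up to the top of the tree. `parent_chain` is a hypothetical bash helper and assumes Linux /proc.

```bash
#!/usr/bin/env bash
# Print "pid comm" for a process and each of its ancestors.
# Stops after the process whose PPid is 0 (PID 1, or the top of a PID namespace).
parent_chain() {
  local pid="$1" comm
  while [ -n "$pid" ] && [ "$pid" -gt 0 ]; do
    comm="$(cat "/proc/$pid/comm" 2>/dev/null)" || return 1
    echo "$pid $comm"
    pid="$(awk '/^PPid:/ {print $2}' "/proc/$pid/status")"
  done
}
```

Usage: `parent_chain "$(docker inspect -f '{{.State.Pid}}' nginx-1)"`. On a host like the one above, this would be expected to list nginx, then containerd-shim, then systemd.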

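Creating and managing containers

Communication flow

- dockerd communicates with containerd over gRPC. Inside dockerd, libcontainerd handles the exchange with containerd through the socket /run/containerd/containerd.sock;

- containerd is started when dockerd starts (on this host it runs as its own systemd service, as the process tree above shows), then listens for and handles gRPC requests;

- for a start or exec request, containerd launches a containerd-shim and carries out the operation through it;

- once running, containerd-shim invokes runc for start/exec/create. It communicates with containerd through the exit and control files, and tracks the processes in the container through the parent-child relationship and SIGCHLD;

- throughout the container lifecycle, containerd uses epoll to watch container files and monitor container events.

Image management

A Docker image contains the filesystem and other content needed to start a container, so images are used mainly to create and start containers. An image consists of multiple filesystem layers combined by a union filesystem (UnionFS). The union filesystem mounts several directories on top of one another to present a single virtual filesystem, and each directory is called a layer.

All image layers are read-only. When a container starts, a writable layer is added on top. Deleting a file that exists in a lower layer is recorded as a whiteout entry in the upper layer rather than by modifying the lower layer. When an image is built, each build instruction that changes the filesystem adds a new layer on top of the previous ones, and files in upper layers hide files at the same path in lower layers.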
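The lookup rule described above can be modelled with plain directories: search the layers from top to bottom and return the first copy of the path. A `.wh.<name>` whiteout file in a higher layer hides that path in every lower layer; this is the whiteout convention of OCI image layers. The toy `resolve_path` function below is a hypothetical bash sketch, not how overlayfs is implemented, and it ignores opaque directories (`.wh..wh..opq`).

```bash
#!/usr/bin/env bash
# Toy union-filesystem lookup.
# Usage: resolve_path REL_PATH TOP_LAYER ... BOTTOM_LAYER
# Prints the path of the visible copy, or returns 1 if absent or whited out.
resolve_path() {
  local rel="$1"; shift
  local dir base layer
  dir="$(dirname "$rel")"
  base="$(basename "$rel")"
  for layer in "$@"; do                      # newest layer first
    if [ -e "$layer/$dir/.wh.$base" ]; then
      return 1                               # deleted in this layer
    fi
    if [ -e "$layer/$rel" ]; then
      echo "$layer/$rel"                     # upper copy hides lower copies
      return 0
    fi
  done
  return 1
}
```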


# Search for images

# Search by name

[root@vms101 ~]# docker search centos

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

centos DEPRECATED; The official build of CentOS. 7575 [OK]

kasmweb/centos-7-desktop CentOS 7 desktop for Kasm Workspaces 36

bitnami/centos-base-buildpack Centos base compilation image 0 [OK]

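# Search with a version string (docker search matches the term against names and descriptions; it does not filter by tag)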

[root@vms101 ~]# docker search centos:7.2

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

vikingco/python Python Stack Docker Base Image: Based on cen… 1

alvintz/centos centos:7.2.1511 0 [OK]

morrowind/centos From centos:7.2 0

# Pull an image

[root@vms101 ~]# docker pull alpine

Using default tag: latest

latest: Pulling from library/alpine

59bf1c3509f3: Pull complete

Digest: sha256:21a3deaa0d32a8057914f36584b5288d2e5ecc984380bc0118285c70fa8c9300

Status: Downloaded newer image for alpine:latest

docker.io/library/alpine:latest

# List local images

[root@vms101 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 605c77e624dd 16 months ago 141MB

alpine latest c059bfaa849c 17 months ago 5.59MB

centos latest 5d0da3dc9764 20 months ago 231MB

# Export the image to a tar archive

[root@vms101 ~]# docker save centos -o /opt/centos.tar.gz

[root@vms101 ~]# ll /opt/centos.tar.gz

-rw-------. 1 root root 238581248 May 9 04:26 /opt/centos.tar.gz

[root@vms101 ~]# tar xvf /opt/centos.tar.gz

079bc5e75545bf45253ab44ce73fbd51d96fa52ee799031e60b65a82e89df662/

079bc5e75545bf45253ab44ce73fbd51d96fa52ee799031e60b65a82e89df662/VERSION

079bc5e75545bf45253ab44ce73fbd51d96fa52ee799031e60b65a82e89df662/json

079bc5e75545bf45253ab44ce73fbd51d96fa52ee799031e60b65a82e89df662/layer.tar

5d0da3dc976460b72c77d94c8a1ad043720b0416bfc16c52c45d4847e53fadb6.json

manifest.json

tar: manifest.json: implausibly old time stamp 1970-01-01 08:00:00

repositories

tar: repositories: implausibly old time stamp 1970-01-01 08:00:00

# Remove the image

[root@vms101 ~]# docker rmi centos

Untagged: centos:latest

Untagged: centos@sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177

Deleted: sha256:5d0da3dc976460b72c77d94c8a1ad043720b0416bfc16c52c45d4847e53fadb6

Deleted: sha256:74ddd0ec08fa43d09f32636ba91a0a3053b02cb4627c35051aff89f853606b59

# Import the image

[root@vms101 ~]# docker load < /opt/centos.tar.gz

74ddd0ec08fa: Loading layer [==================================================>] 238.6MB/238.6MB

Loaded image: centos:latest

[root@vms101 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 605c77e624dd 16 months ago 141MB

alpine latest c059bfaa849c 17 months ago 5.59MB

centos latest 5d0da3dc9764 20 months ago 231MB

# Re-tag an image (the new tag points to the same image ID)

[root@vms101 nginx]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

sujx/nginx-yum-file v1 97d106d963e7 9 minutes ago 2.42GB

centos latest 5d0da3dc9764 20 months ago 231MB


[root@vms101 nginx]# docker tag centos:latest centos8

[root@vms101 nginx]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos8 latest 5d0da3dc9764 20 months ago 231MB

centos latest 5d0da3dc9764 20 months ago 231MB

# Show the layer history of an image

[root@vms101 nginx]# docker history centos8

IMAGE CREATED CREATED BY SIZE COMMENT

5d0da3dc9764 20 months ago /bin/sh -c #(nop) CMD ["/bin/bash"] 0B

<missing> 20 months ago /bin/sh -c #(nop) LABEL org.label-schema.sc… 0B

<missing> 20 months ago /bin/sh -c #(nop) ADD file:805cb5e15fb6e0bb0… 231MB

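docker save writes a plain tar archive whatever the output file is called. That is why /opt/centos.tar.gz above can be unpacked with `tar xvf` and no `-z`. To get a compressed archive, one option is to pipe docker save through gzip; docker load also accepts gzip-compressed archives. `save_gz` is a hypothetical bash helper, and `DOCKER` is an override hook added only so the function can be exercised with a stub.

```bash
#!/usr/bin/env bash
# Export an image as a gzip-compressed tar archive.
# Runs in a subshell so "set -o pipefail" does not leak into the caller.
save_gz() (
  set -o pipefail                       # fail if docker save fails, not only gzip
  image="$1" out="$2"
  ${DOCKER:-docker} save "$image" | gzip -c > "$out"
)
```

Usage: `save_gz centos:latest /opt/centos.tar.gz`, then `docker load -i /opt/centos.tar.gz` on the target host.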

Container management


# List all containers, including stopped ones

[root@vms101 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6a36e33a02f0 nginx "/docker-entrypoint.…" About an hour ago Up About an hour 0.0.0.0:83->80/tcp, :::83->80/tcp ngingx-3

1353d6b1c4f2 nginx "/docker-entrypoint.…" About an hour ago Up About an hour 0.0.0.0:82->80/tcp, :::82->80/tcp ngingx-2

# Start an interactive container from an image

[root@vms101 ~]# docker run -it centos bash

[root@c82b8168f173 /]#

# Press Ctrl+P, Ctrl+Q to detach without stopping the container

[root@vms101 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c82b8168f173 centos "bash" 18 seconds ago Up 17 seconds eager_nash

6a36e33a02f0 nginx "/docker-entrypoint.…" About an hour ago Up About an hour 0.0.0.0:83->80/tcp, :::83->80/tcp ngingx-3

1353d6b1c4f2 nginx "/docker-entrypoint.…" About an hour ago Up About an hour 0.0.0.0:82->80/tcp, :::82->80/tcp ngingx-2

# Open a shell inside the running container

[root@vms101 ~]# docker exec -it c82b8 bash

[root@c82b8168f173 /]# top

top - 21:28:03 up 1:47, 0 users, load average: 0.00, 0.01, 0.05

Tasks: 3 total, 1 running, 2 sleeping, 0 stopped, 0 zombie

%Cpu(s): 0.0 us, 0.0 sy, 0.0 ni,100.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

MiB Mem : 3931.1 total, 2082.2 free, 476.3 used, 1372.5 buff/cache

MiB Swap: 2048.0 total, 2048.0 free, 0.0 used. 3199.2 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1 root 20 0 12036 2088 1572 S 0.0 0.1 0:00.03 bash

21 root 20 0 12036 2088 1572 S 0.0 0.1 0:00.02 bash

35 root 20 0 49112 2164 1520 R 0.0 0.1 0:00.00 top

# Removing a running container fails unless -f is used

[root@vms101 ~]# docker rm c82b

Error response from daemon: You cannot remove a running container c82b8168f173d8d4f0365c8d9672e99db68fd25f224a251b8ac31ef54996b5a1. Stop the container before attempting removal or force remove

[root@vms101 ~]# docker rm -f c82b

c82b

# Run in the background with a container name and a published port (host 81 -> container 80)

[root@vms101 ~]# docker run -d --name nginx-1 -p 81:80 nginx

b5b0c251e4e7458e1022722d6b19d287caba93b307636c54a60a0a28e7f4e30c

[root@vms101 ~]# docker run -d --name nginx-2 -p 82:80 nginx

[root@vms101 ~]# docker run -d --name nginx-3 -p 83:80 nginx

[root@vms101 ~]# netstat -tlnp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:81 0.0.0.0:* LISTEN 3859/docker-proxy

tcp 0 0 0.0.0.0:82 0.0.0.0:* LISTEN 2011/docker-proxy

tcp 0 0 0.0.0.0:83 0.0.0.0:* LISTEN 2121/docker-proxy

tcp6 0 0 :::81 :::* LISTEN 3865/docker-proxy

tcp6 0 0 :::82 :::* LISTEN 2017/docker-proxy

tcp6 0 0 :::83 :::* LISTEN 2127/docker-proxy

# View container logs

[root@vms101 ~]# docker logs nginx-1

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2023/05/08 21:32:14 [notice] 1#1: using the "epoll" event method

2023/05/08 21:32:14 [notice] 1#1: nginx/1.21.5

2023/05/08 21:32:14 [notice] 1#1: built by gcc 10.2.1 20210110 (Debian 10.2.1-6)

2023/05/08 21:32:14 [notice] 1#1: OS: Linux 3.10.0-1160.90.1.el7.x86_64

2023/05/08 21:32:14 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2023/05/08 21:32:14 [notice] 1#1: start worker processes

2023/05/08 21:32:14 [notice] 1#1: start worker process 31

2023/05/08 21:32:14 [notice] 1#1: start worker process 32

172.16.10.1 - - [08/May/2023:21:34:49 +0000] "GET / HTTP/1.1" 200 615 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36 Edg/113.0.1774.35" "-"

2023/05/08 21:34:49 [error] 31#31: *2 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: 172.16.10.1, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "172.16.10.101:81", referrer: "http://172.16.10.101:81/"

172.16.10.1 - - [08/May/2023:21:34:49 +0000] "GET /favicon.ico HTTP/1.1" 404 555 "http://172.16.10.101:81/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36 Edg/113.0.1774.35" "-"

# Show the container's port mappings

[root@vms101 ~]# docker port nginx-1

80/tcp -> 0.0.0.0:81

80/tcp -> [::]:81

# Run a long-lived foreground command so the container keeps running

[root@vms101 ~]# docker run -d centos /usr/bin/tail -f '/etc/hosts'

d43c0e0a418819c0638fb63831e0e7becfb2d9ceed408721b98e8b3013bfaa3a

[root@vms101 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d43c0e0a4188 centos "/usr/bin/tail -f /e…" 24 seconds ago Up 23 seconds pedantic_mcclintock

# Stop, start, and restart a container

[root@vms101 ~]# docker stop nginx-1

nginx-1

[root@vms101 ~]# docker start nginx-1

nginx-1

[root@vms101 ~]# docker restart nginx-1

nginx-1

[root@vms101 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b5b0c251e4e7 nginx "/docker-entrypoint.…" 9 minutes ago Up 9 seconds 0.0.0.0:81->80/tcp, :::81->80/tcp nginx-1

# Use docker inspect -f to extract individual fields

# Get the IP address

[root@vms101 ~]# docker inspect -f "{{.NetworkSettings.IPAddress}}" nginx-1

172.17.0.4

# Get the host PID of the container's main process

[root@vms101 ~]# docker inspect -f "{{.State.Pid}}" nginx-1

4216

# Enter the container's namespaces with nsenter (from util-linux): -m mount, -u UTS, -i IPC, -n network, -p PID

[root@vms101 ~]# yum install -y util-linux

[root@vms101 ~]# nsenter -t 4216 -m -u -i -n -p

root@b5b0c251e4e7:/#

# Stop all running containers

[root@vms101 ~]# docker stop $(docker ps -q)

d43c0e0a4188

b5b0c251e4e7

6a36e33a02f0

1353d6b1c4f2

# Remove all exited containers in bulk (-f is not required for stopped containers)

[root@vms101 ~]# docker rm -f `docker ps -aq -f status=exited`

d43c0e0a4188

b5b0c251e4e7

6a36e33a02f0

1353d6b1c4f2

# Specify the container's DNS server

[root@vms101 ~]# docker run -it --rm centos bash

[root@a440c4ada42f /]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 223.5.5.5

[root@vms101 ~]# docker run -it --rm --dns 114.114.114.114 centos bash

[root@14689e61b122 /]# cat /etc/resolv.conf

nameserver 114.114.114.114

# Restart the container automatically whenever it stops or the Docker daemon starts, e.g. after a host reboot (--restart=always)

[root@vms101 nginx]# docker run -d --restart=always --name AT-nginx -p 8080:80 nginx

2223df5da188178b7f53043f32387e2ad5c6813b63cfb6030a48960e7d340857

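The bulk-removal command above can also be wrapped in a function that reports how many containers it removed. The bash sketch below is a hypothetical helper, `remove_exited`, with a `DOCKER` override hook for testing. It only uses `docker ps -aq -f status=exited` and `docker rm`. `docker container prune` is the built-in alternative: it removes all stopped containers after a confirmation prompt.

```bash
#!/usr/bin/env bash
# Remove every container in the "exited" state, one docker rm per ID.
# Set DOCKER to another command (e.g. a stub) to override the docker CLI.
remove_exited() {
  local ids id n=0
  ids="$(${DOCKER:-docker} ps -aq -f status=exited)" || return 1
  for id in $ids; do
    ${DOCKER:-docker} rm "$id" >/dev/null && n=$((n + 1))
  done
  echo "removed $n container(s)"
}
```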

Building images

Building a YUM-based Nginx image manually


# Pull the CentOS 7.9.2009 image

[root@vms101 ~]# docker pull centos:7.9.2009

[root@vms101 ~]# docker run -it centos:7.9.2009 /bin/bash

# Inside the container: switch to the Huawei Cloud mirrors (including EPEL) and install Nginx

[root@76ea6d768009 /]# yum makecache

[root@76ea6d768009 /]# yum install -y wget

[root@76ea6d768009 /]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo

[root@76ea6d768009 /]# yum install -y epel-release

[root@76ea6d768009 /]# sed -i "s/#baseurl/baseurl/g" /etc/yum.repos.d/epel.repo

[root@76ea6d768009 /]# sed -i "s/metalink/#metalink/g" /etc/yum.repos.d/epel.repo

[root@76ea6d768009 /]# sed -i "s@https\?://download.fedoraproject.org/pub@https://repo.huaweicloud.com@g" /etc/yum.repos.d/epel.repo

[root@76ea6d768009 /]# yum makecache

[root@76ea6d768009 /]# yum update -y

[root@76ea6d768009 /]# yum install -y vim wget pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

[root@76ea6d768009 /]# yum install -y nginx

# Replace the default page and keep Nginx in the foreground (daemon off;) so the container does not exit

[root@76ea6d768009 /]# rm -f /usr/share/nginx/html/index.html

[root@76ea6d768009 /]# echo "Docker YUM Nginx" >> /usr/share/nginx/html/index.html

[root@76ea6d768009 /]# echo "daemon off;" >> /etc/nginx/nginx.conf

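# Commit the container as an image: -a sets the author, -m the commit message, and --change applies a Dockerfile instruction (here EXPOSE)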

[root@vms101 ~]# docker commit -a "sujx@live.cn" -m "nginx yum v1" --change="EXPOSE 80 443" 76ea centos-nginx:v1

sha256:10761eaac11cef2e72957c75e922b9d7a29bf481a4723664f9dc7caa54d4f132

# List the new image

[root@vms101 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos-nginx v1 10761eaac11c About a minute ago 1.04GB

nginx latest 605c77e624dd 16 months ago 141MB

alpine latest c059bfaa849c 17 months ago 5.59MB

centos 7.9.2009 eeb6ee3f44bd 20 months ago 204MB

centos latest 5d0da3dc9764 20 months ago 231MB

# Run a container from the new image

[root@vms101 ~]# docker run -d -p 80:80 --name my-centos-nginx centos-nginx:v1 /usr/sbin/nginx

4df01551e3778be4be619b5a0fe644e404fc92a527622f4bdeb7cad9005278f8

# Test

[root@vms101 ~]# curl 127.0.0.1

Docker YUM Nginx

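Running curl once right after docker run can race Nginx's start-up. A short polling loop avoids that. `wait_for_http` is a hypothetical bash helper; the `CURL` variable is an override hook added for testing. The curl flags used are `-f` (treat HTTP errors as failures), `-s` (silent), and `-S` (still show errors).

```bash
#!/usr/bin/env bash
# Poll URL until the response body contains EXPECT.
# Usage: wait_for_http URL EXPECT [TRIES] [DELAY_SECONDS]
wait_for_http() {
  local url="$1" expect="$2" tries="${3:-10}" delay="${4:-1}" i body
  for ((i = 1; i <= tries; i++)); do
    body="$(${CURL:-curl} -fsS "$url" 2>/dev/null)" || body=""
    case "$body" in
      *"$expect"*) echo "ready after $i attempt(s)"; return 0 ;;
    esac
    sleep "$delay"
  done
  echo "no match for '$expect' at $url" >&2
  return 1
}
```

Usage: `wait_for_http http://127.0.0.1 "Docker YUM Nginx"`.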

Building the Nginx image with a Dockerfile


# Create the directory layout

[root@vms101 ~]# cd /opt

[root@vms101 opt]# mkdir -pv dockerfile/{web/{nginx,tomcat,apache},system/{centos,ubuntu,redhat}}

mkdir: created directory ‘dockerfile’

mkdir: created directory ‘dockerfile/web’

mkdir: created directory ‘dockerfile/web/nginx’

mkdir: created directory ‘dockerfile/web/tomcat’

mkdir: created directory ‘dockerfile/web/apache’

mkdir: created directory ‘dockerfile/system’

mkdir: created directory ‘dockerfile/system/centos’

mkdir: created directory ‘dockerfile/system/ubuntu’

mkdir: created directory ‘dockerfile/system/redhat’

[root@vms101 opt]# cd dockerfile/web/nginx/

[root@vms101 nginx]# touch ./Dockerfile

[root@vms101 nginx]# cat > Dockerfile<<EOF

#My Dockerfile

FROM centos:7.9.2009

MAINTAINER sujingxuan "sujx@live.cn"

RUN yum makecache && yum install -y wget && rm -f /etc/yum.repos.d/* && wget -O /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo && yum install -y epel-release && sed -i "s/#baseurl/baseurl/g" /etc/yum.repos.d/epel.repo && sed -i "s/metalink/#metalink/g" /etc/yum.repos.d/epel.repo && sed -i "s@https\?://download.fedoraproject.org/pub@https://repo.huaweicloud.com@g" /etc/yum.repos.d/epel.repo && yum makecache

RUN yum install -y vim wget pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop nginx

RUN rm -f /usr/share/nginx/html/index.html && echo "Docker YUM Nginx" >> /usr/share/nginx/html/index.html

EXPOSE 80 443

CMD ["nginx","-g","daemon off;"]

EOF

[root@vms101 nginx]# docker build -t sujx/nginx-yum-file:v1 /opt/dockerfile/web/nginx

[root@vms101 nginx]# docker build -t sujx/nginx-yum-file:v1 ./

[+] Building 160.1s (7/7) FINISHED ………………

[root@vms101 nginx]# docker run -d --name nginx-dfile -p 80:80 sujx/nginx-yum-file:v1

7b1f4629ff4a451d95c0a5284a06633bb6ae4c66a27ffb28433563045bde2a51

[root@vms101 nginx]# curl 127.0.0.1

Docker YUM Nginx

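Two Dockerfile mistakes are easy to make and only show up at build or run time:

- Repeating `RUN` after `&&`. The shell then tries to run a command literally named RUN.
- Writing `ENV export NAME=value`. Docker parses this in the legacy `ENV key value` form, which sets a variable named `export`.

The rough bash check below uses grep only. It is a hypothetical sketch, `lint_dockerfile`, not a Dockerfile parser.

```bash
#!/usr/bin/env bash
# Flag two common Dockerfile mistakes; returns 1 if either is found.
lint_dockerfile() {
  local file="$1" rc=0
  # RUN repeated inside a shell command chain
  if grep -nE '&&[[:space:]]*RUN[[:space:]]' "$file"; then
    echo "-> RUN used inside a shell command chain" >&2; rc=1
  fi
  # ENV does not take the shell keyword "export"
  if grep -nE '^[[:space:]]*ENV[[:space:]]+export[[:space:]]' "$file"; then
    echo "-> ENV export NAME=value defines a variable named export" >&2; rc=1
  fi
  return "$rc"
}
```

Usage: `lint_dockerfile /opt/dockerfile/web/nginx/Dockerfile` before running docker build.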

Custom Tomcat images


# Build JDK and Tomcat images on top of the CentOS 7.9.2009 base image.

# Pull the base image

[root@vms101 ~]# docker pull centos:7.9.2009

# Build the JDK image

[root@vms101 ~]# mkdir -p /opt/dockerfile/web/jdk && cd /opt/dockerfile/web/jdk

[root@vms101 jdk]# wget https://repo.huaweicloud.com/java/jdk/8u202-b08/jdk-8u202-linux-x64.rpm

[root@vms101 jdk]# cat ./Dockerfile

# JDK Base Image

FROM centos:7.9.2009

MAINTAINER sujingxuan "sujx@live.cn"

COPY jdk-8u202-linux-x64.rpm /tmp

RUN rpm -Uvh /tmp/jdk-8u202-linux-x64.rpm

ADD profile /etc/profile

ENV JAVA_HOME=/usr/java/default

ENV PATH=$PATH:$JAVA_HOME/bin

ENV CLASSPATH=.:$JAVA_HOME/jre/lib:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

RUN rm -f /etc/localtime && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone && rm -f /tmp/*.rpm

[root@vms101 jdk]# docker build -t centos-jdk:v1 ./

[+] Building 11.0s (9/9) FINISHED

=> [internal] load .dockerignore => => naming to docker.io/library/centos-jdk:v1

# Verify

[root@vms101 ~]# docker run -it --rm --name jdk-test centos-jdk:v1 /bin/bash

[root@4115a47a2c49 /]# java -showversion

java version "1.8.0_202"

Java(TM) SE Runtime Environment (build 1.8.0_202-b08)

Java HotSpot(TM) 64-Bit Server VM (build 25.202-b08, mixed mode)

[root@4115a47a2c49 /]# vi day.java

import java.util.Calendar;

class day {

public static void main(String[] args) {

Calendar cal = Calendar.getInstance();

int year = cal.get(Calendar.YEAR);

int month = cal.get(Calendar.MONTH) + 1;

int day = cal.get(Calendar.DATE);

int hour = cal.get(Calendar.HOUR_OF_DAY);

int minute = cal.get(Calendar.MINUTE);

System.out.println(year + "/" + month + "/" + day + " " + hour + ":" + minute);

}

}

[root@4115a47a2c49 /]# javac day.java

[root@4115a47a2c49 /]# java day

2023/5/10 23:56

# Build the Tomcat base image

# CentOS7 JDK For Tomcat

[root@vms101 ~]# cd /opt/dockerfile/web/tomcat

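# Older releases are removed from dlcdn.apache.org; they remain available under https://archive.apache.org/dist/tomcat/

[root@vms101 tomcat]# wget https://dlcdn.apache.org/tomcat/tomcat-8/v8.5.88/bin/apache-tomcat-8.5.88.tar.gz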

[root@vms101 tomcat]# vim Dockerfile

FROM centos-jdk:v1

MAINTAINER sujingxuan "sujx@live.cn"

ADD apache-tomcat-8.5.88.tar.gz /usr/local/src

RUN useradd -M -d /usr/local/src/apache-tomcat-8.5.88 tomcat

RUN chown -R tomcat:tomcat /usr/local/src/apache-tomcat-8.5.88

ENV TOMCAT_MAJOR_VERSION=8

ENV TOMCAT_MINOR_VERSION=8.5.88

ENV CATALINA_HOME=/usr/local/src/apache-tomcat-8.5.88

ENV APP_DIR=${CATALINA_HOME}/webapps

[root@vms101 tomcat]# docker build -t tomcat-base:v1 .

[root@vms101 tomcat]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

tomcat-base v1 38e080bd23d4 3 minutes ago 815MB

centos-jdk v1 ae6b4f8f0c29 48 minutes ago 785MB

# Build the application image

[root@vms101 tomcat-app1]# vim run_tomcat.sh

#!/bin/bash

su - tomcat -c "/usr/local/src/apache-tomcat-8.5.88/bin/startup.sh start"

tail -f /etc/hosts

[root@vms101 tomcat-app1]# chmod +x run_tomcat.sh

[root@vms101 tomcat-app1]# mkdir myapp && echo "Tomcat Web Page1">>myapp/index.html

[root@vms101 tomcat-app1]# vim Dockerfile

# Tomcat WEB APPS

FROM tomcat-base:v1

MAINTAINER sujingxuan "sujx@live.cn"

COPY run_tomcat.sh /usr/local/src/apache-tomcat-8.5.88/

COPY myapp/* /usr/local/src/apache-tomcat-8.5.88/webapps/myapp/

RUN chown -R tomcat:tomcat /usr/local/src/apache-tomcat-8.5.88/

CMD ["/usr/local/src/apache-tomcat-8.5.88/run_tomcat.sh"]

EXPOSE 8080 8005

[root@vms101 tomcat-app1]# docker build -t tomcat-app1:v1 .

[root@vms101 ~]# docker run -it -d -p 80:8080 tomcat-app1:v1

# Verify

[root@vms101 ~]# curl http://172.16.10.101/myapp/

Tomcat Web Page1

# Prepare a tomcat-app2 image in the same way (its page reads "Tomcat Web Page2"), then run one container from each image

[root@vms101 ~]# docker run -it -d -p 81:8080 tomcat-app1:v1

246cdabbb8ed87ede039306870d4c61dddd27c50b3857b53b0d09efd79046f15

[root@vms101 ~]# docker run -it -d -p 82:8080 tomcat-app2:v1

22a0c67911dac716df2aaf4b24b5927bcd30f590e3b858f6d8bac5f329943fc2

[root@vms101 ~]# curl 127.0.0.1:81/myapp/

Tomcat Web Page1

[root@vms101 ~]# curl 127.0.0.1:82/myapp/

Tomcat Web Page2

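The tomcat-app2 build context differs from tomcat-app1's only in its page. It can therefore be generated by copying the Dockerfile and run_tomcat.sh and writing a new index.html. `prepare_app_context` is a hypothetical bash helper. The full directory paths in the usage line are assumptions; the post only shows a tomcat-app1 prompt.

```bash
#!/usr/bin/env bash
# Create a Tomcat app build context from an existing one with a different page.
# Usage: prepare_app_context SRC_DIR DST_DIR PAGE_TEXT
prepare_app_context() {
  local src="$1" dst="$2" page="$3"
  mkdir -p "$dst/myapp" || return 1
  cp "$src/Dockerfile" "$src/run_tomcat.sh" "$dst/" || return 1
  chmod +x "$dst/run_tomcat.sh"              # CMD executes the script directly
  printf '%s\n' "$page" > "$dst/myapp/index.html"
}
```

Usage (assumed paths): `prepare_app_context /opt/dockerfile/web/tomcat-app1 /opt/dockerfile/web/tomcat-app2 "Tomcat Web Page2" && docker build -t tomcat-app2:v1 /opt/dockerfile/web/tomcat-app2`.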

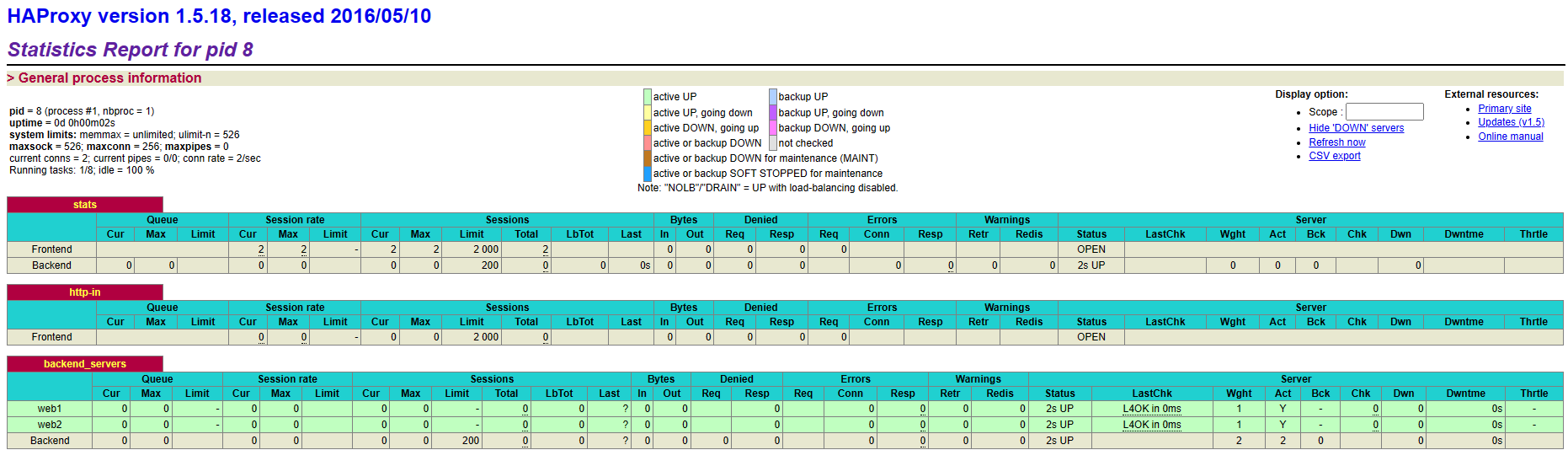

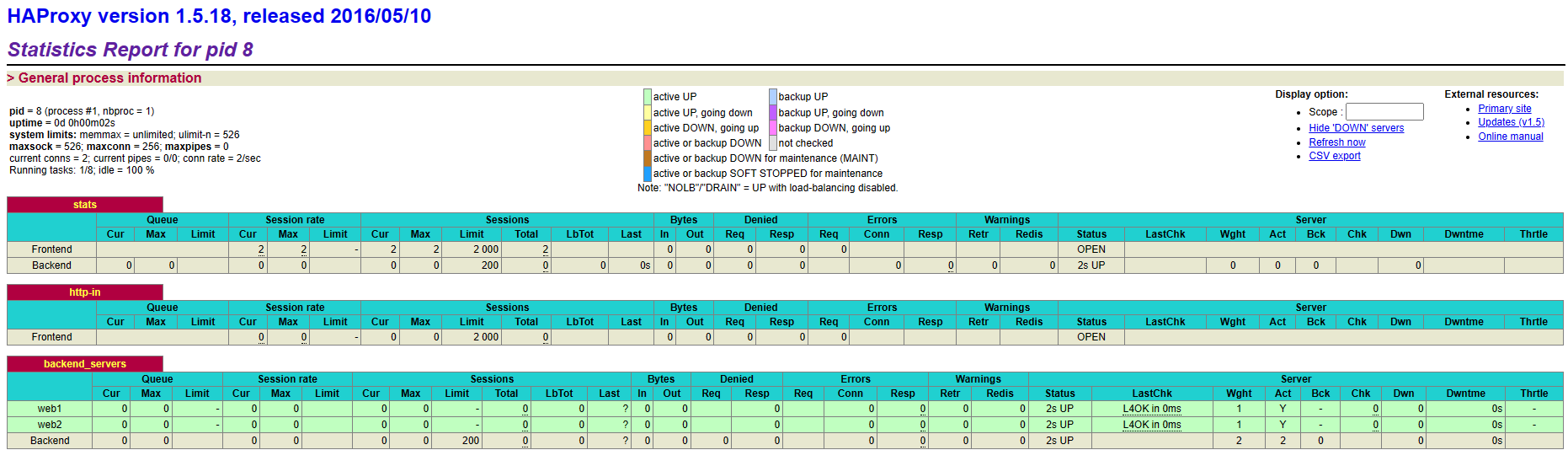

Building an HAProxy image


# Create the HAProxy startup script

[root@vms101 ~]# mkdir /opt/dockerfile/haproxy/ && cd /opt/dockerfile/haproxy/ && touch Dockerfile run_haproxy.sh haproxy.cfg

[root@vms101 haproxy]# cat run_haproxy.sh

#!/bin/bash

haproxy -f /etc/haproxy/haproxy.cfg

/bin/bash

# Create the HAProxy configuration file

[root@vms101 haproxy]# cat haproxy.cfg

global

log 127.0.0.1 local2 info

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 256

user haproxy

group haproxy

daemon

defaults

mode http

log global

option httplog

timeout connect 10s

timeout client 30s

timeout server 30s

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth haadmin:qwe123!!

frontend http-in

bind *:80

default_backend backend_servers

option forwardfor

backend backend_servers

mode http

balance roundrobin

server web1 172.16.10.101:81 check inter 3000 fall 2 rise 5

server web2 172.16.10.101:82 check inter 3000 fall 2 rise 5

# Build the HAProxy image

[root@vms101 haproxy]# cat Dockerfile

#Haproxy Base Image

FROM centos:7.9.2009

MAINTAINER sujingxuan "sujx@live.cn"

RUN yum install -y haproxy

RUN mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.org

COPY haproxy.cfg /etc/haproxy/

COPY run_haproxy.sh /usr/bin/

EXPOSE 80 9999

CMD ["/usr/bin/run_haproxy.sh"]

[root@vms101 haproxy]# chmod +x run_haproxy.sh

[root@vms101 haproxy]# docker build -t haproxy-base:v1 .

[+] Building 0.1s (10/10) FINISHED

=> => naming to docker.io/library/haproxy-base:v1

# Start the front-end container

[root@vms101 ~]# docker run -itd --name haproxy -p 80:80 -p 9999:9999 haproxy-base:v1

91dfb0cb8d8fbf1c81b8804819eba4a308c57209da84ba526cb1bf15be1de2e6

[root@vms101 ~]# docker ps

91dfb0cb8d8f haproxy-base:v1 0.0.0.0:80->80/tcp, :::80->80/tcp, 0.0.0.0:9999->9999/tcp, :::9999->9999/tcp haproxy

5ec41ac5f902 tomcat-app1:v1 8005/tcp, 0.0.0.0:81->8080/tcp, :::81->8080/tcp

d903c1419c9b tomcat-app2:v1 8005/tcp, 0.0.0.0:82->8080/tcp, :::82->8080/tcp

# Verify (HAProxy balances requests round-robin between the two backends)

[root@vms101 ~]# curl 127.0.0.1/myapp/

Tomcat Web Page2

[root@vms101 ~]# curl 127.0.0.1/myapp/

Tomcat Web Page1

[root@vms101 ~]# curl 127.0.0.1/myapp/

Tomcat Web Page2

[root@vms101 ~]# curl 127.0.0.1/myapp/

Tomcat Web Page1

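Rather than eyeballing individual curl results, you can send a batch of requests and count the responses per backend. With the roundrobin balancer and two healthy servers, the counts should come out roughly even. `count_responses` is a hypothetical bash helper; `CURL` is an override hook added for testing.

```bash
#!/usr/bin/env bash
# Request URL N times and print how often each response body was returned.
count_responses() {
  local url="$1" n="$2" i
  for ((i = 0; i < n; i++)); do
    ${CURL:-curl} -s "$url"
    echo                                   # guarantee one line per response
  done | sed '/^$/d' | sort | uniq -c
}
```

Usage: `count_responses http://127.0.0.1/myapp/ 10`.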

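Data management

Data layers

Docker images are layered: the image layers are read-only, and a writable layer is added on top when a container is created. Data written by the container is stored in that writable layer.

The data layer directories of a container:

- LowerDir: the image layers, i.e. the image itself; read-only

- UpperDir: the container's upper layer; read-write, and all data the container changes is stored here

- MergedDir: the container's filesystem; the union filesystem merges lowerdir and upperdir into the single unified view presented to the container and the user

- WorkDir: an internal working directory that overlayfs uses on the host; it is emptied when the filesystem is mounted, and its contents are not visible to users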
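The four directories can be read directly from `docker inspect` through the `GraphDriver.Data` fields. This avoids scrolling through the full JSON. `overlay_dirs` is a hypothetical bash helper; `DOCKER` is an override hook added for testing. LowerDir may contain several colon-separated paths.

```bash
#!/usr/bin/env bash
# Print the overlay2 directories of a container, one per line.
overlay_dirs() {
  local id="$1" key
  for key in LowerDir UpperDir MergedDir WorkDir; do
    printf '%-9s %s\n' "$key" \
      "$(${DOCKER:-docker} inspect -f "{{.GraphDriver.Data.$key}}" "$id")"
  done
}
```

Usage: `overlay_dirs c8dcc`. For the container's own view of the same changes, `docker diff c8dcc` lists paths added (A), changed (C), or deleted (D) relative to the image.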


| # Start a container

[root@vms101 ~]# docker run -it -d centos:7.9.2009 /bin/bash

c8dcc79d12a987f4e37c4e9747f37bfe9b4c9ae7eb3a41212ae38b58a0fc0ff4

# Inspect the container's configuration

[root@vms101 ~]# docker inspect c8dcc

……

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/1e520f13bb5e4cc2ba6776c164c59777f35d00ed7a489e061deeec140293c351-init/diff:/var/lib/docker/overlay2/e8b3f1e50e7ff6272e00ddf3aba286ea0729d65b54c57542970fc180fd350bc3/diff",

"MergedDir": "/var/lib/docker/overlay2/1e520f13bb5e4cc2ba6776c164c59777f35d00ed7a489e061deeec140293c351/merged",

"UpperDir": "/var/lib/docker/overlay2/1e520f13bb5e4cc2ba6776c164c59777f35d00ed7a489e061deeec140293c351/diff",

"WorkDir": "/var/lib/docker/overlay2/1e520f13bb5e4cc2ba6776c164c59777f35d00ed7a489e061deeec140293c351/work"

},

"Name": "overlay2"

},

"Mounts": [],

……

# Create files inside the container

[root@vms101 ~]# docker exec -it c8dcc /bin/bash

[root@c8dcc79d12a9 /]# dd if=/dev/zero of=file bs=1M count=100

100+0 records in

100+0 records out

104857600 bytes (105 MB) copied, 0.276637 s, 379 MB/s

[root@c8dcc79d12a9 /]# md5sum file

2f282b84e7e608d5852449ed940bfc51 file

[root@c8dcc79d12a9 /]# cp anaconda-post.log /opt/anaconda-post.log

[root@c8dcc79d12a9 /]# exit

exit

# On the host, look at the files the container created

[root@vms101 ~]# tree /var/lib/docker/overlay2/c

c18937983445d3e2d6c11c5bb3ce20a336f9024111c529bd7a99008c27bcf096/ c595c60cf63d162ae9493e112498b941cf8d40a6b7f3bdeb73fa39609383c1e4/ cxn3dw459jii5d9r5iseys6zx/

[root@vms101 ~]# tree /var/lib/docker/overlay2/l

l/ lmv5guk13yscu2hburzkmzyl7/ lwp6qtbel95861tstj3q5tqu5/

[root@vms101 ~]# tree /var/lib/docker/overlay2/1e520f13bb5e4cc2ba6776c164c59777f35d00ed7a489e061deeec140293c351/diff/

/var/lib/docker/overlay2/1e520f13bb5e4cc2ba6776c164c59777f35d00ed7a489e061deeec140293c351/diff/

├── file

├── opt

│ └── anaconda-post.log

└── root

2 directories, 2 files

[root@vms101 ~]# md5sum file

md5sum: file: No such file or directory

[root@vms101 ~]# md5sum /var/lib/docker/overlay2/1e520f13bb5e4cc2ba6776c164c59777f35d00ed7a489e061deeec140293c351/diff/file

2f282b84e7e608d5852449ed940bfc51 /var/lib/docker/overlay2/1e520f13bb5e4cc2ba6776c164c59777f35d00ed7a489e061deeec140293c351/diff/file

# Copy a file from the host into a container

[root@vms101 ~]# docker cp testapp/index.html 4d7d:/data

|
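The LowerDir value in the inspect output above contains two paths separated by a colon. OverlayFS accepts several read-only lower directories and stacks them with the leftmost on top. The top one here is the container's `-init` layer, which Docker uses for container-specific files such as /etc/hosts and /etc/resolv.conf. Below it is the centos image layer. A minimal POSIX shell sketch (list_lower_layers is a hypothetical helper name) prints one layer per line:

```shell
# list_lower_layers (hypothetical helper): print each read-only layer of an
# overlay2 LowerDir value on its own line, topmost layer first.
list_lower_layers() {
  printf '%s\n' "$1" | tr ':' '\n'
}

# Example (needs Docker and the container started above):
# list_lower_layers "$(docker inspect -f '{{.GraphDriver.Data.LowerDir}}' c8dcc)"
```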

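Characteristics of data volumes:

- A data volume is a directory or file and can be shared among multiple containers

- Changes to a data volume are immediately visible in every container that uses it

- Data in a volume persists, even after the containers that use the volume are deleted

- Writing to a volume inside a container does not affect the image itself

Typical use cases for data volumes:

- Log output

- Static web pages

- Application configuration files

- Sharing directories or files among multiple containers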


| # Mount an anonymous volume (no host path given): Docker creates a volume with a random name on the host

[root@vms101 ~]# docker run -d --name files -v /data centos:7.9.2009

4d7d7b6ebee8207a9b24964a76224c4be2853fbda9797d63dadfae5a0d89b374

[root@vms101 ~]# docker inspect 4d7d |grep -A5 Mounts

"Mounts": [

{

"Type": "volume",

"Name": "83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba",

"Source": "/var/lib/docker/volumes/83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba/_data",

"Destination": "/data",

# List volumes

[root@vms101 ~]# docker volume ls

DRIVER VOLUME NAME

local 83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba

# Create a named volume

[root@vms101 ~]# docker volume create httpd-vol

httpd-vol

# List volumes

[root@vms101 ~]# docker volume ls

DRIVER VOLUME NAME

local 83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba

local httpd-vol

# Show volume details

[root@vms101 ~]# docker volume inspect httpd-vol

[

{

"CreatedAt": "2023-05-11T23:47:10+08:00",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/httpd-vol/_data",

"Name": "httpd-vol",

"Options": null,

"Scope": "local"

}

]

[root@vms101 ~]# docker volume inspect 83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba

[

{

"CreatedAt": "2023-05-11T23:28:03+08:00",

"Driver": "local",

"Labels": {

"com.docker.volume.anonymous": ""

},

"Mountpoint": "/var/lib/docker/volumes/83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba/_data",

"Name": "83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba",

"Options": null,

"Scope": "local"

}

]

# Clean up: remove the container, then its volume

[root@vms101 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4d7d7b6ebee8 centos:7.9.2009 "/bin/bash" 44 minutes ago Exited (0) 44 minutes ago files

[root@vms101 ~]# docker rm -f 4d7d

4d7d

[root@vms101 ~]# docker volume rm 83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba -f

83c5ae40340c360db9cf11c79f7ccd363a981b18a2e664c22b1d9105cac370ba

|

Bind Mounts


| # Create the page to serve

[root@vms101 ~]# mkdir testapp

[root@vms101 ~]# echo "<p><h1>Test APP Page.</h1></p>" > testapp/index.html

[root@vms101 ~]# docker pull httpd

Using default tag: latest

latest: Pulling from library/httpd

a2abf6c4d29d: Already exists

dcc4698797c8: Pull complete

41c22baa66ec: Pull complete

67283bbdd4a0: Pull complete

d982c879c57e: Pull complete

Digest: sha256:0954cc1af252d824860b2c5dc0a10720af2b7a3d3435581ca788dff8480c7b32

Status: Downloaded newer image for httpd:latest

docker.io/library/httpd:latest

# Use -v to bind-mount the host directory into the container

[root@vms101 ~]# docker run -d --name web -v /root/testapp/:/usr/local/apache2/htdocs/ -p 80:80 httpd

[root@vms101 ~]# curl 127.0.0.1

<p><h1>Test APP Page.</h1></p>

# Remove the container; the data on the host is kept

[root@vms101 ~]# docker rm -f 538

538

[root@vms101 ~]# ll testapp/

total 4

-rw-r--r--. 1 root root 51 May 11 23:10 index.html

|
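The examples so far pass three different kinds of argument to `-v`:

- `-v /data` creates an anonymous volume, like the randomly named one above.
- A name before the colon selects a named volume, such as httpd-vol.
- An absolute host path before the colon is a bind mount, like /root/testapp/.

A rough version of that rule as a hypothetical POSIX shell helper follows. It assumes Linux absolute paths and ignores relative paths, Windows paths and trailing options such as `:ro`.

```shell
# classify_volume_arg (hypothetical helper): tell which kind of mount a
# `docker run -v` argument requests, using the simplified rule described above.
classify_volume_arg() {
  local spec=$1
  local src=${spec%%:*}          # part before the first colon
  case $spec in
    *:*) ;;                      # has a source part: bind mount or named volume
    *) echo "anonymous $spec"; return 0 ;;
  esac
  case $src in
    /*) echo "bind $src" ;;      # absolute host path
    *)  echo "named $src" ;;     # anything else is treated as a volume name
  esac
}
```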

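Data Volume Containers

A data volume container lets multiple containers share data. First create a container running in the background to act as the "server" that provides the storage. Other containers then use its volumes as "clients".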


| # Create the data storage container

[root@vms101 ~]# docker run --name data -it -v /data --rm busybox

Unable to find image 'busybox:latest' locally

latest: Pulling from library/busybox

5cc84ad355aa: Pull complete

Digest: sha256:5acba83a746c7608ed544dc1533b87c737a0b0fb730301639a0179f9344b1678

Status: Downloaded newer image for busybox:latest

/ #

# Open a new terminal and inspect the container

[root@vms101 ~]# docker inspect -f {{.Mounts}} data

[{volume dd641a30fbca54f88a6c8e7057e79a5af057cb3e6120b8d521bc3f52d6b743c7 /var/lib/docker/volumes/dd641a30fbca54f88a6c8e7057e79a5af057cb3e6120b8d521bc3f52d6b743c7/_data /data local true }]

# Copy files into the storage container

[root@vms101 ~]# docker cp testapp/index.html data:/data

Successfully copied 2.05kB to data:/data

[root@vms101 ~]# docker exec data ls /data/testapp

index.html

# Create a client container that takes its volumes from the data container above

[root@vms101 ~]# docker run --name client --volumes-from data -it --rm busybox

/ # ls

bin data dev etc home proc root sys tmp usr var

/ # ls /data

testapp

/ # ls /data/testapp/

index.html

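# A client container can still be created while the storage container is stopped, but not after it has been deleted. Existing client containers are not affected by the deletion, because --volumes-from simply mounts the same volumes automatically.

# Remove a container together with its anonymous volumes

docker rm -v -f <container-id>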

|

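Networking

After the Docker service is installed, it creates a bridge named docker0 on the host by default. docker0 is a layer-2 network device; a bridge connects different network interfaces on Linux together. Its default IP address is 172.17.0.1/16. Docker also creates three networks by default: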


| [root@vms101 ~]# ip a

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:5e:4e:37:81 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:5eff:fe4e:3781/64 scope link

valid_lft forever preferred_lft forever

[root@vms101 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4645c608490f bridge bridge local

d24bbf5a7ca6 host host local

bbc3fc5e53b4 none null local

# The network after starting a container

[root@vms101 ~]# docker run -d --name web -p 8080:80 nginx

[root@vms101 ~]# ip a

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:16:d2:ae:dc brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:16ff:fed2:aedc/64 scope link

valid_lft forever preferred_lft forever

5: veth2344e4d@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether fe:a1:6c:51:73:87 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::fca1:6cff:fe51:7387/64 scope link

valid_lft forever preferred_lft forever

[root@vms101 ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.024216d2aedc no veth2344e4d

[root@vms101 ~]# docker run -d --name web1 -p 81:80 nginx

ea153bf7bf6a95f58e46d902d0113c1ce21a1fd1cd8e7004cfdac3e6385523c0

[root@vms101 ~]# docker run -d --name web2 -p 82:80 nginx

096113318c74e3a109451c938fd52f3f1dd48ba146760ce2f8c23de017feb390

[root@vms101 ~]# docker inspect -f {{.NetworkSettings.IPAddress}} web1

172.17.0.3

[root@vms101 ~]# docker inspect -f {{.NetworkSettings.IPAddress}} web2

172.17.0.4

|

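Each time a container is created, the host gains one more virtual interface, which forms a pair with the container's interface. In veth2344e4d@if4, for example, the suffix @if4 means the peer of this interface is the interface with index 4 in the container's network namespace (named eth0 inside the container). The container automatically receives an address from the 172.17.0.0/16 subnet, allocated by default starting from 172.17.0.2. The address is not fixed and may change each time the container is restarted. This device type is a veth pair: virtual network devices that always come in pairs and connect network namespaces that are otherwise isolated from each other. Containers on the same bridge can therefore reach each other directly, as the following pings from another container show.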


| [root@5926763045b4 /]# ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.094 ms

--- 172.17.0.3 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.094/0.094/0.094/0.000 ms

[root@5926763045b4 /]# ping 172.17.0.4

PING 172.17.0.4 (172.17.0.4) 56(84) bytes of data.

64 bytes from 172.17.0.4: icmp_seq=1 ttl=64 time=0.090 ms

--- 172.17.0.4 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.070/0.080/0.090/0.010 ms

|
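To find which host-side veth belongs to which container, match the @ifN suffix against the container's interface index. The sketch below (parse_veth is a hypothetical helper) extracts the index, name and peer index from one line of `ip a` / `ip -o link` output. Inside the container, `cat /sys/class/net/eth0/iflink` prints the index of the host-side peer (5 for veth2344e4d above), so the two numbers can be cross-checked.

```shell
# parse_veth (hypothetical helper): split a line such as
#   5: veth2344e4d@if4: <BROADCAST,...> ... master docker0 ...
# into host ifindex, interface name and peer ifindex.
# Returns 1 for lines without an @ifN suffix (e.g. docker0 itself).
parse_veth() {
  local out
  out=$(printf '%s\n' "$1" |
    sed -n 's/^\([0-9][0-9]*\): \([^@:][^@:]*\)@if\([0-9][0-9]*\):.*/index=\1 name=\2 peer_index=\3/p')
  [ -n "$out" ] || return 1
  echo "$out"
}
```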

Changing the Bridge's Network Configuration

Via Docker's daemon.json:


| [root@vms101 ~]# cat /etc/docker/daemon.json

{

"bip": "192.168.100.1/24",

"registry-mirrors": ["https://37y8py0j.mirror.aliyuncs.com"]

}

[root@vms101 ~]# systemctl daemon-reload

[root@vms101 ~]# systemctl restart docker

[root@vms101 ~]# ip a

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:16:d2:ae:dc brd ff:ff:ff:ff:ff:ff

inet 192.168.100.1/24 brd 192.168.100.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:16ff:fed2:aedc/64 scope link

valid_lft forever preferred_lft forever

|
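dockerd will not start if daemon.json is not valid JSON, and a missing comma between two keys is enough to break it. It can be worth validating the file before restarting. A minimal sketch, assuming python3 is installed on the host (check_daemon_json is a hypothetical helper name; `jq empty FILE` would serve the same purpose):

```shell
# check_daemon_json (hypothetical helper): succeed only if the file parses as JSON.
# Assumes python3 is available; json.tool exits non-zero on a parse error.
check_daemon_json() {
  local file=${1:-/etc/docker/daemon.json}
  if python3 -m json.tool "$file" > /dev/null 2>&1; then
    echo "OK: $file"
  else
    echo "Invalid JSON: $file" >&2
    return 1
  fi
}
```

Usage: `check_daemon_json && systemctl restart docker`.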

Via the docker service's unit file:


| [root@vms101 ~]# vim /etc/systemd/system/multi-user.target.wants/docker.service

[Service]

Type=notify

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --bip=192.168.100.2/24

[root@vms101 ~]# systemctl daemon-reload

[root@vms101 ~]# systemctl restart docker

[root@vms101 ~]# ip a

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:16:d2:ae:dc brd ff:ff:ff:ff:ff:ff

inet 192.168.100.2/24 brd 192.168.100.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:16ff:fed2:aedc/64 scope link

valid_lft forever preferred_lft forever

|
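/etc/systemd/system/multi-user.target.wants/docker.service is normally a symlink to the packaged unit /usr/lib/systemd/system/docker.service. Editing it therefore changes the package's own file, which a package upgrade may overwrite. A systemd drop-in keeps the change separate. The sketch below (write_docker_override is a hypothetical helper; `systemctl edit docker` opens the same override file interactively) writes such a drop-in. The same option must not be set both as a flag and in daemon.json: dockerd refuses to start if, for example, bip appears in both places.

```shell
# write_docker_override (hypothetical helper): write a systemd drop-in that
# replaces dockerd's command line. The empty "ExecStart=" line clears the
# packaged ExecStart before the new one is set.
write_docker_override() {
  local dir=${1:-/etc/systemd/system/docker.service.d}
  local flags=${2:-"--bip=192.168.100.2/24"}
  mkdir -p "$dir"
  cat > "$dir/override.conf" <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock $flags
EOF
}
```

Usage: `write_docker_override && systemctl daemon-reload && systemctl restart docker`.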

Changing the Default Bridge


| [root@vms101 ~]# yum install -y bridge-utils

Package bridge-utils-1.5-9.el7.x86_64 already installed and latest version

Nothing to do

[root@vms101 ~]# brctl addbr br0

[root@vms101 ~]# ip a a 192.168.100.1/24 dev br0

[root@vms101 ~]# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.000000000000 no

docker0 8000.024216d2aedc no

[root@vms101 ~]# ip a

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:16:d2:ae:dc brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:16ff:fed2:aedc/64 scope link

valid_lft forever preferred_lft forever

14: br0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether e2:73:fe:78:07:72 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.1/24 scope global br0

valid_lft forever preferred_lft forever

# Modify the docker service unit file

[root@vms101 ~]# vim /etc/systemd/system/multi-user.target.wants/docker.service

[Service]

Type=notify

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock -b br0

[root@vms101 ~]# systemctl daemon-reload

[root@vms101 ~]# systemctl restart docker

[root@vms101 ~]# docker run --rm centos:7.9.2009 hostname -i

192.168.100.2

|
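The bridge address can come from bip or from an existing bridge passed with `-b`. Either way, that address plus its prefix length defines the subnet containers are allocated from, and the bridge address acts as their gateway. That is why the container above received 192.168.100.2 next to br0's 192.168.100.1. The subnet arithmetic can be sketched in POSIX shell (the function names are hypothetical):

```shell
# ip_to_int / int_to_ip (hypothetical helpers): dotted quad <-> 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# bip_subnet (hypothetical helper): for a bridge address in CIDR form,
# print "<network>/<prefix> <broadcast>".
bip_subnet() {
  local addr=${1%/*} prefix=${1#*/} ip mask net bcast
  ip=$(ip_to_int "$addr")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  net=$(( ip & mask ))
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))
  echo "$(int_to_ip "$net")/$prefix $(int_to_ip "$bcast")"
}
```

For example, `bip_subnet 192.168.100.1/24` prints `192.168.100.0/24 192.168.100.255`, matching the brd value shown by `ip a` for docker0 earlier.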

Networking Between Containers


| [root@vms101 ~]# docker run -d -it --name web0 -p 80:80 nginx

54cca62ffbe9fc59f4e7474d0a481136cdbfca68eb677e892c9b98673bc4842d

[root@vms101 ~]# docker run -d -it --name web1 -p 81:80 --link web0 nginx

824595bdb5e4991a506f5b3cb87e4fe88c08696df1be0ab4988c50b89be53bae

[root@vms101 ~]# docker exec -it web1 cat /etc/hosts

172.17.0.2 web0 54cca62ffbe9

172.17.0.3 824595bdb5e4

[root@vms101 ~]# docker run -d -it --name web2 -p 82:80 --link web0 --link web1 nginx

51a303ad5da9476dd1b878064ff927f3fd49511e32b27c10785799d2ecc82a60

[root@vms101 ~]# docker exec -it web2 cat /etc/hosts

172.17.0.2 web0 54cca62ffbe9

172.17.0.3 web1 824595bdb5e4

172.17.0.4 51a303ad5da9

[root@vms101 ~]# docker run -d -it --name web3 -p 83:80 --link web0 --link web1 --link web2 centos:7.9.2009

277807e81bb92a66e1696af982467f66dda719b6e4a3ae2e0499aeabdcba16b5

[root@vms101 ~]# docker exec -it web3 cat /etc/hosts

172.17.0.2 web0 54cca62ffbe9

172.17.0.3 web1 824595bdb5e4

172.17.0.4 web2 51a303ad5da9

172.17.0.5 277807e81bb9

[root@vms101 ~]# docker exec -it web3 /bin/bash

[root@277807e81bb9 /]# ping web0

PING web0 (172.17.0.2) 56(84) bytes of data.

64 bytes from web0 (172.17.0.2): icmp_seq=1 ttl=64 time=0.105 ms

--- web0 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.060/0.082/0.105/0.024 ms

[root@277807e81bb9 /]# ping web2

PING web2 (172.17.0.4) 56(84) bytes of data.

64 bytes from web2 (172.17.0.4): icmp_seq=1 ttl=64 time=0.176 ms

--- web2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.061/0.118/0.176/0.058 ms

|
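As the output shows, `--link` works by writing static entries into the new container's /etc/hosts, and only in one direction: web1 can resolve web0, but nothing is added to web0's file. Inside a container, `getent hosts web0` performs the real lookup. The hypothetical helper below only mimics how a resolver reads /etc/hosts, to make the mechanism explicit. Docker's documentation describes `--link` as a legacy feature; containers attached to the same user-defined network (`docker network create`) can instead resolve each other by name through Docker's embedded DNS.

```shell
# hosts_lookup (hypothetical helper): return the address on the first
# hosts-file line that lists NAME as a hostname or alias.
hosts_lookup() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v n="$name" '
    /^[[:space:]]*#/ { next }   # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
  ' "$file"
}
```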

Network Modes


| [root@vms101 ~]# docker run --network

bridge container: host ipvlan macvlan none null overlay

|

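Docker containers support seven network modes:

- bridge mode: the default. Each container gets its own network configuration and is attached to a virtual bridge to communicate with the outside world. It reaches external networks through SNAT, and DNAT allows it to be reached from outside.

  - Network resources are isolated

  - No manual configuration is needed

  - Containers can access external networks

  - External hosts cannot reach a container directly; DNAT (port publishing with -p) is required

  - Lower performance

  - Port management is cumbersome

- host mode: the container uses the host's network interfaces and IP address directly. Network performance is the highest, but containers cannot use the same ports, so it suits services with fixed ports. There is no network isolation between containers.

- container mode: shares the network of an existing container while staying isolated from the host network. It suits containers that communicate frequently, since their processes talk to each other over the lo interface.

- ipvlan mode: containers use IP-based VLANs (IPvlan)

- macvlan mode: containers use MAC-based VLANs (macvlan), each container getting its own MAC address

- none/null mode: the container gets no network configuration apart from loopback and cannot communicate with the outside

- overlay mode: builds a distributed network spanning multiple Docker hosts and their containers; encryption of application traffic can optionally be enabled (--opt encrypted)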
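The command-line form for each mode can be summarised with a dry-run helper that only prints commands. This is a hypothetical sketch: network_run_cmd and the default nginx image are placeholders. macvlan, ipvlan and overlay are rejected because they first need a network created with `docker network create -d <driver>` plus driver-specific options (overlay also requires Swarm mode), which are not covered here.

```shell
# network_run_cmd (hypothetical helper): print, without running it, a
# `docker run` command that uses the given network mode.
network_run_cmd() {
  local mode=$1 image=${2:-nginx}
  case $mode in
    bridge)      echo "docker run -d -p 8080:80 $image" ;;          # default; -p adds DNAT rules
    host)        echo "docker run -d --network host $image" ;;      # -p would be discarded
    none)        echo "docker run -d --network none $image" ;;      # loopback only
    container:*) echo "docker run -d --network $mode $image" ;;     # shares another container's netns; -p not allowed
    ipvlan|macvlan|overlay)
      echo "$mode needs a network created first (docker network create -d $mode ...)" >&2
      return 1 ;;
    *) echo "unknown mode: $mode" >&2; return 1 ;;
  esac
}
```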

Configuring a Non-HTTPS Registry


| # Pull the registry image

[root@vms101 ~]# docker pull registry

# Configure the docker daemon to use HTTP instead of the default HTTPS for this registry

[root@vms101 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://37y8py0j.mirror.aliyuncs.com"],

"insecure-registries": ["172.16.10.101:5000"]

}

[root@vms101 ~]# systemctl daemon-reload

[root@vms101 ~]# systemctl restart docker

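# On the client (its /etc/docker/daemon.json needs the same insecure-registries entry, otherwise the push is attempted over HTTPS)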

[root@vms100 ~]# docker pull hello-world

[root@vms100 ~]# docker tag hello-world:latest 172.16.10.101:5000/cka/helloworld:v1

[root@vms100 ~]# docker push 172.16.10.101:5000/cka/helloworld:v1

The push refers to repository [172.16.10.101:5000/cka/helloworld]

e07ee1baac5f: Pushed

v1: digest: sha256:f54a58bc1aac5ea1a25d796ae155dc228b3f0e11d046ae276b39c4bf2f13d8c4 size: 525

# Script that lists the images already stored in the registry

[root@vms100 ~]# cat list_img.sh

#!/bin/bash

# Usage: ./list_img.sh <registry-ip>   (the registry listens on port 5000)

registry="$1:5000"

# /v2/_catalog returns {"repositories": [...]}; /v2/<repo>/tags/list returns {"name": ..., "tags": [...]}

curl -s "http://${registry}/v2/_catalog" | jq -r '.repositories[]' | while read -r repo; do

  curl -s "http://${registry}/v2/${repo}/tags/list" | jq -r '.tags[]?' | while read -r tag; do

    echo "${registry}/${repo}:${tag}"

  done

done

[root@vms100 ~]# chmod +x list_img.sh

[root@vms100 ~]# ./list_img.sh 172.16.10.101

172.16.10.101:5000/cka/centos7:v1

172.16.10.101:5000/cka/helloworld:v1

172.16.10.101:5000/rhce8/mysql:v1

172.16.10.101:5000/rhce8/wordpress:v1

|
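The tag 172.16.10.101:5000/cka/helloworld:v1 works because the first path component of an image reference names the registry whenever it looks like a host (it contains a dot or a colon, or is localhost). Otherwise the image comes from Docker Hub, where single-name images live under library/ (compare docker.io/library/httpd:latest in the pull output earlier). A simplified sketch of that splitting rule follows; parse_image_ref is hypothetical, and digests such as @sha256:... are not handled.

```shell
# parse_image_ref (hypothetical helper): split an image reference into
# registry, repository and tag using the simplified rule described above.
parse_image_ref() {
  local ref=$1 registry=docker.io tag=latest first
  case $ref in
    */*)
      first=${ref%%/*}
      case $first in
        *.*|*:*|localhost) registry=$first; ref=${ref#*/} ;;   # first component is a host
      esac ;;
  esac
  case ${ref##*/} in
    *:*) tag=${ref##*:}; ref=${ref%:*} ;;                     # ":" after the last "/" starts the tag
  esac
  if [ "$registry" = docker.io ]; then
    case $ref in
      */*) ;;
      *) ref=library/$ref ;;                                  # official Docker Hub images
    esac
  fi
  echo "registry=$registry repo=$ref tag=$tag"
}
```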

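Single-Host Orchestration with Compose

Docker Compose is a single-host orchestration tool for containers. It handles the dependencies between containers and replaces manually creating, starting and stopping containers with individual docker commands.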