Advanced Kubernetes Concepts

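Security management

A kubeconfig file is the file used to log in to the cluster; by default it is ~/.kube/config. It is copied into place by following the prompts printed right after the Kubernetes cluster is installed, and it is identical to /etc/kubernetes/admin.conf. It contains the cluster address, the admin user, and the associated certificates and keys. When the cluster is created, the admin user in this file is already granted the highest level of privileges.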

    # Anyone who holds admin.conf can manage the cluster
    [root@kmaster ~]# sz /etc/kubernetes/admin.conf
    # A Windows machine with kubectl installed can manage it as well
    PowerShell 7.3.7
    PS C:\Users\root> kubectl get nodes --kubeconfig=admin.conf
    NAME      STATUS   ROLES                  AGE   VERSION
    kmaster   Ready    control-plane,master   35d   v1.21.14
    knode1    Ready    worker1                35d   v1.21.14
    knode2    Ready    worker2                35d   v1.21.14
    [root@knode2 ~]# kubectl get nodes --kubeconfig=admin.conf
    NAME      STATUS   ROLES                  AGE   VERSION
    kmaster   Ready    control-plane,master   35d   v1.21.14
    knode1    Ready    worker1                35d   v1.21.14
    knode2    Ready    worker2                35d   v1.21.14
    # Set the KUBECONFIG environment variable instead of passing --kubeconfig
    [root@knode2 ~]# export KUBECONFIG=./admin.conf
    [root@knode2 ~]# kubectl get nodes
    NAME      STATUS   ROLES                  AGE   VERSION
    kmaster   Ready    control-plane,master   35d   v1.21.14
    knode1    Ready    worker1                35d   v1.21.14
    knode2    Ready    worker2                35d   v1.21.14

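Creating a kubeconfig file

Besides copying an existing kubeconfig file, you can build a custom one and then grant permissions to the user it identifies. Creating a kubeconfig file requires a private key and a certificate issued by the cluster CA.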

Requesting a certificate

    # Create the test environment
    [root@kmaster ~]# mkdir role ; cd role
    [root@kmaster role]# kubectl create ns nsrole
    namespace/nsrole created
    [root@kmaster role]# kubens nsrole
    Context "kubernetes-admin@kubernetes" modified.
    Active namespace is "nsrole".
    # Generate the private key sujx.key
    [root@kmaster role]# openssl genrsa -out sujx.key 2048
    Generating RSA private key, 2048 bit long modulus
    ..........................+++
    ..................+++
    e is 65537 (0x10001)
    # Generate the certificate signing request sujx.csr from the private key
    [root@kmaster role]# openssl req -new -key sujx.key -out sujx.csr -subj "/CN=sujx/O=cka2020"
    # Base64-encode the certificate signing request onto a single line
    [root@kmaster role]# cat sujx.csr |base64 |tr -d "\n"
    LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1pqQ0NBVTRDQVFBd0lURU5NQXNHQTFVRUF3d0VjM1ZxZURFUU1BNEdBMVVFQ2d3SFkydGhNakF5TURDQwpBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQU0zSzZ4S0l0ZWFxbHB0MVRrOUorS2krCmNIT2phT0ltTmtBcVQ5cVBPZWJDM2tjaWY1cHg2bUZyMU81WW9SRkNKQzN1L3VYdlFEMFFaQ2RuMVpCTkhpbU0KQmRnQ09NQndBTWJZK0d0QmthYXUwdzVmc1BYTzc1VWpITEQ2SUtneWhoRGZaa3g5THlFbzN5OEJYd2xwRWRoUApLS1UyMmMwc05SMDNVVjNIMHluNU03Yzg2K3BkNzlxQTlPWkdKUmxnVUVvYTErNUZIQmJqTHE5bGxxcGNrNitQCkxPaVRQeGF6TXZ2MEZRbElRMjd0c0p0S0lpZkp5QjREYm5aYllNVXg1cFIwSEI3Y1ZqWkFma00yK1F5Zk5jdy8KYVEyUXIrcUZIU09wRjRKVjNSamU4UUUrb1FxTjc0aFRDcEFzUWlYUGEwWExuN1JXRlNudDJhcWs5OWNkUklNQwpBd0VBQWFBQU1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQkprWmZIUWdXUzZ0TGJ5aVdyZ2lYYUVJNFByVXhxCnROZGxScGxranVWRm1uRHJwYWxmUU1UN3cycHBvREI5ZjIvMDR1dUp5MGNSZXlCcktRaWRkaHR5V2h1bGtKelUKckNiOWJGaTlidnZOWmNGV21mdlFxaEZON1MxekswNFZSL1JvWUl6NVpBMEh5bmdXL3lDMXVUUElrR1IveWlKZwpuQkhnQ3d0VWtnTTIxRmFQQlExRWVZL0FyQ1pTZHlPZVRScXhYdUgrdEJiUGZFc1VkZXQ5REcrcUUwYlpuQ2d6CnhlZldSQlBpbXBXcEl5d2lrbWM3Z2RyVmpSd2Qva2l1OFl6c29iaWxETTJUNEZxeWZjMk9iMUhQS3R2V0I3WVIKYzE5bWd2Nk5McmxqT2NETVl1bURZb1BvazBPVE1NOVhZY1QxSmFCQzBQMUJhYnZ4Lzl2cmNNZVEKLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==
    # Create the YAML file for the certificate request
    [root@kmaster role]# cat sujx.yaml
    apiVersion: certificates.k8s.io/v1
    kind: CertificateSigningRequest
    metadata:
      name: sujx
    spec:
      request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1pqQ0NBVTRDQVFBd0lURU5NQXNHQTFVRUF3d0VjM1ZxZURFUU1BNEdBMVVFQ2d3SFkydGhNakF5TURDQwpBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQU0zSzZ4S0l0ZWFxbHB0MVRrOUorS2krCmNIT2phT0ltTmtBcVQ5cVBPZWJDM2tjaWY1cHg2bUZyMU81WW9SRkNKQzN1L3VYdlFEMFFaQ2RuMVpCTkhpbU0KQmRnQ09NQndBTWJZK0d0QmthYXUwdzVmc1BYTzc1VWpITEQ2SUtneWhoRGZaa3g5THlFbzN5OEJYd2xwRWRoUApLS1UyMmMwc05SMDNVVjNIMHluNU03Yzg2K3BkNzlxQTlPWkdKUmxnVUVvYTErNUZIQmJqTHE5bGxxcGNrNitQCkxPaVRQeGF6TXZ2MEZRbElRMjd0c0p0S0lpZkp5QjREYm5aYllNVXg1cFIwSEI3Y1ZqWkFma00yK1F5Zk5jdy8KYVEyUXIrcUZIU09wRjRKVjNSamU4UUUrb1FxTjc0aFRDcEFzUWlYUGEwWExuN1JXRlNudDJhcWs5OWNkUklNQwpBd0VBQWFBQU1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQkprWmZIUWdXUzZ0TGJ5aVdyZ2lYYUVJNFByVXhxCnROZGxScGxranVWRm1uRHJwYWxmUU1UN3cycHBvREI5ZjIvMDR1dUp5MGNSZXlCcktRaWRkaHR5V2h1bGtKelUKckNiOWJGaTlidnZOWmNGV21mdlFxaEZON1MxekswNFZSL1JvWUl6NVpBMEh5bmdXL3lDMXVUUElrR1IveWlKZwpuQkhnQ3d0VWtnTTIxRmFQQlExRWVZL0FyQ1pTZHlPZVRScXhYdUgrdEJiUGZFc1VkZXQ5REcrcUUwYlpuQ2d6CnhlZldSQlBpbXBXcEl5d2lrbWM3Z2RyVmpSd2Qva2l1OFl6c29iaWxETTJUNEZxeWZjMk9iMUhQS3R2V0I3WVIKYzE5bWd2Nk5McmxqT2NETVl1bURZb1BvazBPVE1NOVhZY1QxSmFCQzBQMUJhYnZ4Lzl2cmNNZVEKLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==
      signerName: kubernetes.io/kube-apiserver-client
      usages:
      - client auth
    # Submit the certificate request
    [root@kmaster role]# kubectl apply -f sujx.yaml
    certificatesigningrequest.certificates.k8s.io/sujx created
    # The request waits for an administrator's approval
    [root@kmaster role]# kubectl get csr
    NAME   AGE   SIGNERNAME                            REQUESTOR          CONDITION
    sujx   8s    kubernetes.io/kube-apiserver-client   kubernetes-admin   Pending
    # Approve the request
    [root@kmaster role]# kubectl certificate approve sujx
    certificatesigningrequest.certificates.k8s.io/sujx approved
    # The certificate has now been issued
    [root@kmaster role]# kubectl get csr
    NAME   AGE   SIGNERNAME                            REQUESTOR          CONDITION
    sujx   45s   kubernetes.io/kube-apiserver-client   kubernetes-admin   Approved,Issued
    # Export the issued certificate as sujx.crt
    [root@kmaster role]# kubectl get csr/sujx -o jsonpath='{.status.certificate}' | base64 -d > sujx.crt
    # Create a cluster role binding that grants the cluster-admin cluster role to user sujx
    [root@kmaster role]# kubectl create clusterrolebinding test1 --clusterrole=cluster-admin --user=sujx
    clusterrolebinding.rbac.authorization.k8s.io/test1 created
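The `request` field of a CertificateSigningRequest holds the base64-encoded CSR as a single line, which is why the pipeline above strips newlines with `tr -d "\n"`. The sketch below shows the same encode and decode round trip on a stand-in file. The file name `demo.csr` and its contents are hypothetical placeholders, not a real CSR.

```shell
# Work in a throwaway directory so the role/ directory is not touched.
workdir=$(mktemp -d)

# Stand-in for sujx.csr: any multi-line PEM-like text behaves the same way.
printf '%s\n' '-----BEGIN CERTIFICATE REQUEST-----' 'placeholder-body' \
  '-----END CERTIFICATE REQUEST-----' > "$workdir/demo.csr"

# Encode and join the (possibly wrapped) base64 output into one line.
encoded=$(base64 < "$workdir/demo.csr" | tr -d '\n')

# Decoding the single-line value gives back the original file.
printf '%s' "$encoded" | base64 -d > "$workdir/roundtrip.csr"

# Count the newline characters left in the encoded value.
newline_count=$(printf '%s' "$encoded" | wc -l | tr -d ' ')
echo "newlines in encoded value: $newline_count"
```

The same idea explains the later `base64 -d > sujx.crt` step: the API server returns the issued certificate base64-encoded in `.status.certificate`, and decoding it restores the PEM file.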

Creating the kubeconfig

    # Create a kubeconfig template
    [root@kmaster role]# cat kc1.yaml
    apiVersion: v1
    kind: Config
    preferences: {}
    clusters:
    - cluster:
      name: john
    users:
    - name: sujx
    contexts:
    - context:
      name: context1
      namespace: default
    current-context: "context1"
    # Copy the template
    [root@kmaster role]# cp kc1.yaml kc1
    # Copy the cluster CA certificate
    [root@kmaster role]# cp /etc/kubernetes/pki/ca.crt ./
    # Set the cluster entry
    [root@kmaster role]# kubectl config --kubeconfig=kc1 set-cluster cluster1 --server=https://192.168.10.10:6443 --certificate-authority=ca.crt --embed-certs=true
    Cluster "cluster1" set.
    # Set the user entry
    [root@kmaster role]# kubectl config --kubeconfig=kc1 set-credentials sujx --client-certificate=sujx.crt --client-key=sujx.key --embed-certs=true
    User "sujx" set.
    # Set the context entry
    [root@kmaster role]# kubectl config --kubeconfig=kc1 set-context context1 --cluster=cluster1 --namespace=default --user=sujx
    Context "context1" modified.
    # Test
    # Can sujx list pods in the current namespace?
    [root@kmaster role]# kubectl auth can-i list pods --as sujx
    yes
    # Can sujx list pods in the kube-system namespace?
    [root@kmaster role]# kubectl auth can-i list pods -n kube-system --as sujx
    yes
    # Can the cluster be managed from another node with this file?
    [root@kmaster role]# scp kc1 knode1:/root
    kc1
    [root@knode1 ~]# kubectl --kubeconfig=kc1 get nodes
    NAME      STATUS   ROLES                  AGE   VERSION
    kmaster   Ready    control-plane,master   21h   v1.21.14
    knode1    Ready    <none>                 21h   v1.21.14
    knode2    Ready    <none>                 21h   v1.21.14

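Authorization

Kubernetes authorization is based on RBAC (role-based access control). Permissions are not granted to users directly; instead, a set of permissions is grouped into a role, and the role is then granted to a user. The object that grants a role to a user is called a rolebinding. Both role and rolebinding are namespaced: they take effect only in the namespace in which they are created.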

role and rolebinding

    # Create namespaces for testing
    [root@kmaster role]# kubectl create ns ns1
    namespace/ns1 created
    [root@kmaster role]# kubens ns1
    Context "kubernetes-admin@kubernetes" modified.
    Active namespace is "ns1".
    [root@kmaster role]# kubectl create ns ns3
    namespace/ns3 created
    [root@kmaster role]# kubectl create ns ns4
    namespace/ns4 created

Creating a role

    # Generate a YAML template from the command line
    [root@kmaster role]# kubectl create role pod-reader --verb=get,watch,list --resource=pods --dry-run=client -o yaml > role1.yaml
    [root@kmaster role]# cat role1.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      creationTimestamp: null
      name: pod-reader
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
      - watch
      - list
    # Create the role
    [root@kmaster role]# kubectl apply -f role1.yaml
    role.rbac.authorization.k8s.io/pod-reader created
    [root@kmaster role]# kubectl get role
    NAME         CREATED AT
    pod-reader   2023-10-03T13:58:11Z
    # Inspect the role
    [root@kmaster role]# kubectl describe role pod-reader
    Name:         pod-reader
    Labels:       <none>
    Annotations:  <none>
    PolicyRule:
      Resources  Non-Resource URLs  Resource Names  Verbs
      ---------  -----------------  --------------  -----
      pods       []                 []              [get watch list]

Creating a rolebinding

    # Create it from the command line
    [root@kmaster role]# kubectl create rolebinding rbind1 --role=pod-reader --user=sujx
    rolebinding.rbac.authorization.k8s.io/rbind1 created
    [root@kmaster role]# kubectl get rolebindings
    NAME     ROLE              AGE
    rbind1   Role/pod-reader   41s
    [root@kmaster role]# kubectl describe rolebindings rbind1
    Name:         rbind1
    Labels:       <none>
    Annotations:  <none>
    Role:
      Kind:  Role
      Name:  pod-reader
    Subjects:
      Kind  Name  Namespace
      ----  ----  ---------
      User  sujx
    [root@kmaster role]# kubectl delete rolebinding rbind1
    rolebinding.rbac.authorization.k8s.io "rbind1" deleted
    # Create it from a YAML file
    [root@kmaster role]# kubectl create rolebinding rbind1 --role=pod-reader --user=sujx --dry-run=client -o yaml > role1binding.yaml
    [root@kmaster role]# cat role1binding.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      creationTimestamp: null
      name: rbind1
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: pod-reader
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: User
      name: sujx
    [root@kmaster role]# kubectl apply -f role1binding.yaml
    rolebinding.rbac.authorization.k8s.io/rbind1 created
    [root@kmaster role]# kubectl get rolebindings
    NAME     ROLE              AGE
    rbind1   Role/pod-reader   6s
    [root@kmaster role]# kubectl describe rolebindings rbind1
    Name:         rbind1
    Labels:       <none>
    Annotations:  <none>
    Role:
      Kind:  Role
      Name:  pod-reader
    Subjects:
      Kind  Name  Namespace
      ----  ----  ---------
      User  sujx

clusterrole and clusterrolebinding

A role and a rolebinding apply only within one specific namespace. For a role to take effect in all namespaces, use a clusterrole and a clusterrolebinding instead.

Creating a clusterrole

    # Create the clusterrole from a YAML file
    [root@kmaster role]# kubectl create clusterrole pod-reader --verb=get,watch,list --resource=deploy --dry-run=client -o yaml > clusterrole1.yaml
    [root@kmaster role]# cat clusterrole1.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      creationTimestamp: null
      name: pod-reader
    rules:
    - apiGroups:
      - apps
      resources:
      - deployments
      verbs:
      - get
      - watch
      - list
    [root@kmaster role]# kubectl apply -f clusterrole1.yaml
    clusterrole.rbac.authorization.k8s.io/pod-reader created
    [root@kmaster role]# kubectl get clusterrole
    NAME                      CREATED AT
    admin                     2023-10-02T16:05:57Z
    calico-kube-controllers   2023-10-02T16:08:14Z
    calico-node               2023-10-02T16:08:14Z
    cluster-admin             2023-10-02T16:05:57Z
    pod-reader                2023-10-03T14:18:32Z
    # Alternatively, create the same clusterrole directly from the command line
    # ("read" is not an RBAC verb, so the verbs match the YAML above)
    [root@kmaster role]# kubectl create clusterrole pod-reader --verb=get,watch,list --resource=deploy

Creating a clusterrolebinding

    [root@kmaster role]# kubectl create clusterrolebinding cbind1 --clusterrole=pod-reader --user=sujx --dry-run=client -o yaml > clusterrole1binding.yaml
    [root@kmaster role]# cat clusterrole1binding.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      creationTimestamp: null
      name: cbind1
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: pod-reader
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: User
      name: sujx
    [root@kmaster role]# kubectl apply -f clusterrole1binding.yaml
    clusterrolebinding.rbac.authorization.k8s.io/cbind1 created
    [root@kmaster role]# kubectl get clusterrolebindings
    NAME     ROLE
    cbind1   ClusterRole/pod-reader

service account

The account a person uses to log in to Kubernetes is a user account. The account that the processes inside a pod run as is a service account, abbreviated sa.

    # Current service accounts
    [root@kmaster role]# kubectl get sa
    NAME      SECRETS   AGE
    default   1         22h
    # Create the service account app1
    [root@kmaster role]# kubectl create sa app1
    serviceaccount/app1 created
    [root@kmaster role]# kubectl get sa
    NAME      SECRETS   AGE
    app1      1         10s
    default   1         22h
    # On this cluster version (v1.21), creating a service account also creates a token secret
    [root@kmaster role]# kubectl get secrets
    NAME                  TYPE                                  DATA   AGE
    app1-token-wlfrf      kubernetes.io/service-account-token   3      33s
    default-token-slzfv   kubernetes.io/service-account-token   3      22h
    [root@kmaster role]# kubectl get secrets |grep app1
    app1-token-wlfrf      kubernetes.io/service-account-token   3      41s
    # Create a test deployment with the nginx image and 3 replicas
    [root@kmaster role]# kubectl create deployment web1 --image=nginx --replicas=3 --dry-run=client -o yaml > web1.yaml
    [root@kmaster role]# kubectl apply -f web1.yaml
    deployment.apps/web1 created
    [root@kmaster role]# kubectl get deploy
    NAME   READY   UP-TO-DATE   AVAILABLE   AGE
    web1   3/3     3            1           25s
    # Run the pods of web1 under the service account app1
    [root@kmaster role]# kubectl set sa deploy web1 app1
    deployment.apps/web1 serviceaccount updated
    # Kubernetes replaces the pods automatically
    [root@kmaster role]# kubectl get pods
    NAME                    READY   STATUS        RESTARTS   AGE
    web1-55d5cd6df-r6fsr    1/1     Running       0          7s
    web1-55d5cd6df-wgqf8    1/1     Running       0          8s
    web1-55d5cd6df-xzjx2    1/1     Running       0          5s
    web1-5bfb6d8dcc-889qq   0/1     Terminating   0          68s
    # The service account is now app1
    [root@kmaster role]# kubectl describe deploy web1 |grep -i account
      Service Account:  app1

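Resource limits

By default, containers and pods may consume all of the physical compute resources of the node they run on, so limits need to be set. This is usually done with the resources field in the pod spec, or with LimitRange and ResourceQuota objects.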

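resources

In the YAML file that defines a pod, the resources field sets the minimum and maximum CPU and memory a container may consume: requests sets the minimum the container is guaranteed, and limits sets the upper bound.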

The tool used here is memload. Usage: memload [amount of memory to occupy, in MiB]

    # Set pod1's memory request (the minimum it needs) to 100Gi
    [root@kmaster role]# cat pod1.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod1
      name: pod1
    spec:
      containers:
      - image: centos
        imagePullPolicy: IfNotPresent
        name: pod1
        command: ['sh','-c','sleep 5000']
        resources:
          requests:
            cpu: 50m
            memory: 100Gi
          limits:
            cpu: 100m
            memory: 200Gi
    # Deploy pod1
    [root@kmaster role]# kubectl apply -f pod1.yaml
    pod/pod1 created
    # The pod stays Pending because no node can satisfy the memory request
    [root@kmaster role]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    pod1   0/1     Pending   0          3s
    # Inspect the pod
    [root@kmaster role]# kubectl describe pod pod1
    Name:         pod1
    Events:
      Type     Reason            Age   From               Message
      ----     ------            ----  ----               -------
      Warning  FailedScheduling  17s   default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 Insufficient memory.
      # The memory request cannot be met
      Warning  FailedScheduling  16s   default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 Insufficient memory.
    # Clean up pod1
    [root@kmaster role]# kubectl delete pod pod1
    pod "pod1" deleted
    # Edit pod1.yaml: change 100Gi to 100Mi and 200Gi to 200Mi, then redeploy
    [root@kmaster role]# kubectl apply -f pod1.yaml
    pod/pod1 created
    [root@kmaster role]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP              NODE
    pod1   1/1     Running   0          12s   10.244.69.228   knode1
    # Drop the page cache on knode1
    [root@knode1 ~]# echo 3 > /proc/sys/vm/drop_caches
    [root@knode1 ~]# free -h
                  total        used        free      shared  buff/cache   available
    Mem:           3.8G        1.3G        2.1G         14M        472M        2.3G
    # Run the memory stress test
    # Install the test tool memload
    [root@kmaster role]# kubectl cp memload-7.0-1.r29766.x86_64.rpm pod1:/root
    [root@kmaster role]# kubectl exec -it pod1 -- bash
    [root@pod1 /]# cd
    [root@pod1 ~]# rpm -i memload-7.0-1.r29766.x86_64.rpm
    # First run: allocating 200 MiB hits the 200Mi limit and the process is killed
    [root@pod1 ~]# memload 200
    Attempting to allocate 200 Mebibytes of resident memory...
    Killed
    # Second run: allocating 100 MiB stays under the limit
    [root@pod1 ~]# memload 100
    Attempting to allocate 100 Mebibytes of resident memory...
    # Memory used on the host rises by about 100M (1.3G to 1.4G)
    [root@knode1 ~]# free -h
                  total        used        free      shared  buff/cache   available
    Mem:           3.8G        1.4G        2.0G         14M        481M        2.2G
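To make the numbers in this test concrete, the following sketch converts Kubernetes binary-suffix quantities to bytes and compares a memload argument against the container limit. It is a simplified model and not how the kubelet or kernel enforces limits: it only handles plain integers with Ki, Mi or Gi suffixes, and it treats "at or above the limit" as a kill because the container's other processes also count toward the limit. The function names `to_bytes` and `would_exceed_limit` are made up for this illustration.

```shell
# Convert a quantity such as 200Mi or 100Gi to bytes.
# Simplification: integer values with Ki, Mi or Gi suffixes only.
to_bytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo "$1" ;;
  esac
}

# would_exceed_limit MIB LIMIT
# memload takes mebibytes, so its argument is turned into a quantity first.
would_exceed_limit() {
  [ "$(to_bytes "${1}Mi")" -ge "$(to_bytes "$2")" ]
}

to_bytes 200Mi   # prints 209715200
to_bytes 100Gi   # prints 107374182400

if would_exceed_limit 200 200Mi; then
  echo "memload 200: at or over the 200Mi limit"
fi
if ! would_exceed_limit 100 200Mi; then
  echo "memload 100: within the 200Mi limit"
fi
```

The 100Gi request in the first version of pod1.yaml is about 107 GB, far more than the 3.8G of memory that `free -h` reports on knode1, which is why the scheduler reported "Insufficient memory".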

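limitrange

LimitRange is a resource-limiting feature provided by Kubernetes. It can constrain the CPU and memory used by pods or containers, as well as the storage that a PVC may request.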

    # Constrain every container to at least 250Mi and at most 512Mi of memory
    [root@kmaster role]# cat limit.yaml
    apiVersion: v1
    kind: LimitRange
    metadata:
      name: mem-limit-range
    spec:
      limits:
      - max:
          memory: 512Mi
        min:
          memory: 250Mi
        type: Container
    [root@kmaster role]# kubectl apply -f limit.yaml
    limitrange/mem-limit-range created
    [root@kmaster role]# kubectl get limitranges
    NAME              CREATED AT
    mem-limit-range   2023-10-04T11:28:16Z
    [root@kmaster role]# kubectl describe limitranges mem-limit-range
    Name:       mem-limit-range
    Namespace:  default
    Type        Resource  Min    Max    Default Request  Default Limit  Max Limit/Request Ratio
    ----        --------  ---    ---    ---------------  -------------  -----------------------
    Container   memory    250Mi  512Mi  512Mi            512Mi          -
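As a rough mental model of what this LimitRange does at admission time, the sketch below checks a container's memory request against `min` and its memory limit against `max`. It is a simplification under stated assumptions: values are plain integers in Mi, defaults are not applied, and the function name `check_container` is invented for the example.

```shell
# Values taken from limit.yaml, expressed in Mi.
MIN_MI=250
MAX_MI=512

# check_container REQUEST_MI LIMIT_MI
# Prints "admitted" or the reason for rejection; returns non-zero on rejection.
check_container() {
  if [ "$1" -lt "$MIN_MI" ]; then
    echo "rejected: request ${1}Mi is below min ${MIN_MI}Mi"
    return 1
  fi
  if [ "$2" -gt "$MAX_MI" ]; then
    echo "rejected: limit ${2}Mi is above max ${MAX_MI}Mi"
    return 1
  fi
  echo "admitted"
}

check_container 100 200 || true    # pod1's 100Mi/200Mi settings fall below min
check_container 256 512            # inside the allowed range
check_container 300 1024 || true   # limit above max
```

Under this model, the pod1.yaml used in the resources section (100Mi request, 200Mi limit) would be rejected in a namespace where mem-limit-range is active.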

resourcequota

A ResourceQuota limits how many resources a namespace may use in total, for example the number of services or the number of pods.

    # Deploy an nginx deployment with 3 replicas
    [root@kmaster role]# kubectl apply -f deploy.yaml
    deployment.apps/web1 created
    [root@kmaster role]# kubectl get deploy
    NAME   READY   UP-TO-DATE   AVAILABLE   AGE
    web1   3/3     3            3           9s
    # Create a resource quota: at most 4 pods and 2 services
    [root@kmaster role]# cat resource.yaml
    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: computer-resources
    spec:
      hard:
        pods: "4"
        services: "2"
    [root@kmaster role]# kubectl apply -f resource.yaml
    resourcequota/computer-resources created
    [root@kmaster role]# kubectl get resourcequotas
    NAME                 AGE   REQUEST                      LIMIT
    computer-resources   9s    pods: 5/4, services: 1/2
    [root@kmaster role]# kubectl scale deployment web1 --replicas=10
    deployment.apps/web1 scaled
    # Scaled to 10 replicas, but only 4 pods end up being created
    [root@kmaster role]# kubectl get pods
    NAME                    READY   STATUS    RESTARTS   AGE
    web1-5bfb6d8dcc-5zllb   1/1     Running   0          8m50s
    web1-5bfb6d8dcc-jfbbd   1/1     Running   0          8m50s
    web1-5bfb6d8dcc-qrxfw   1/1     Running   0          8m50s
    web1-5bfb6d8dcc-zflrn   1/1     Running   0          2s
    # Create a service named svc1
    [root@kmaster role]# kubectl expose deployment web1 --name=svc1 --port=80
    service/svc1 exposed
    # The default namespace already contains the kubernetes service, so only one more service can be created
    [root@kmaster role]# kubectl expose deployment web1 --name=svc2 --port=80
    Error from server (Forbidden): services "svc2" is forbidden: exceeded quota: computer-resources, requested: services=1, used: services=2, limited: services=2
    [root@kmaster role]# kubectl get svc
    NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)
    kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP
    svc1         ClusterIP   10.103.175.155   <none>        80/TCP
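The Forbidden message above spells out the arithmetic the quota applies: a request is refused when `used + requested` would exceed the hard limit. The sketch below reproduces that check with the numbers from this example. The function names `quota_allows` and `creatable` are hypothetical helpers, and the model ignores everything a real quota controller does beyond simple counting.

```shell
# quota_allows USED REQUESTED HARD
# Succeeds when USED + REQUESTED stays within HARD.
quota_allows() {
  [ $(( $1 + $2 )) -le "$3" ]
}

# creatable USED REQUESTED HARD
# Prints how many of the REQUESTED new objects still fit under the quota.
creatable() {
  free=$(( $3 - $1 ))
  if [ "$free" -lt 0 ]; then
    free=0
  fi
  if [ "$2" -lt "$free" ]; then
    echo "$2"
  else
    echo "$free"
  fi
}

# Services: kubernetes and svc1 already exist (used=2), svc2 asks for one more, hard=2.
if quota_allows 2 1 2; then echo "svc2 allowed"; else echo "svc2 forbidden"; fi

# Pods: 3 are running, scaling to 10 asks for 7 more, hard=4.
echo "additional pods that fit: $(creatable 3 7 4)"
```

With three pods already running and a hard limit of four, only one of the seven extra replicas fits, which matches the four pods listed after the scale command.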

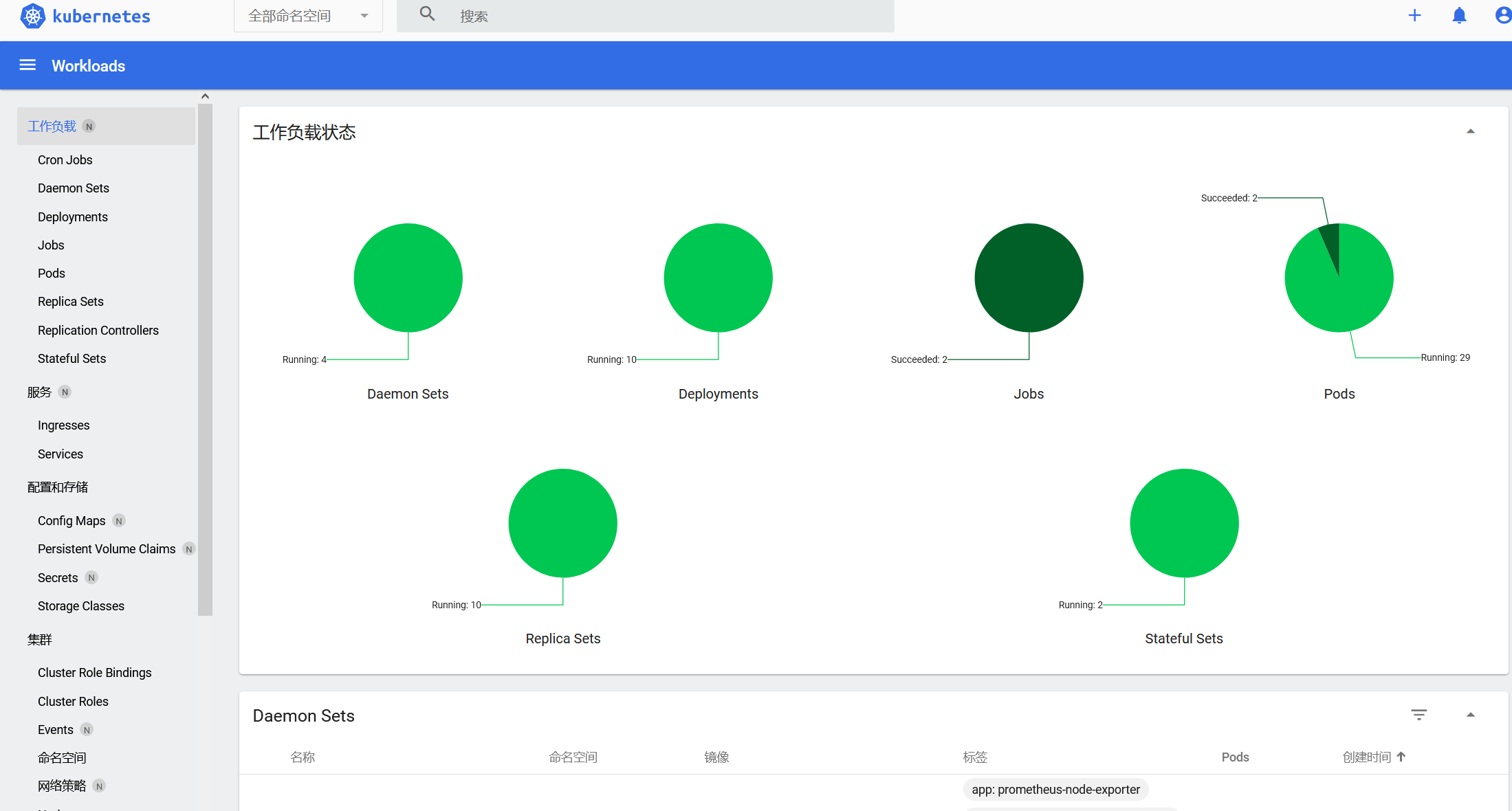

Dashboard management

    # Preparation: download the manifest, then pull the images on all nodes
    [root@kmaster ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml
    # Change the image pull policy from Always to IfNotPresent
    [root@kmaster ~]# grep image recommended.yaml
              image: kubernetesui/dashboard:v2.4.0
              imagePullPolicy: IfNotPresent
              image: kubernetesui/metrics-scraper:v1.0.7
              imagePullPolicy: IfNotPresent
    [root@kmaster ~]# docker pull kubernetesui/dashboard:v2.4.0
    [root@kmaster ~]# docker pull kubernetesui/metrics-scraper:v1.0.7
    # Deploy the dashboard
    [root@kmaster ~]# kubectl apply -f recommended.yaml
    # Change the service type from ClusterIP to LoadBalancer
    [root@kmaster ~]# kubectl edit svc -n kubernetes-dashboard kubernetes-dashboard
    spec:
      ……
      ports:
      - nodePort: 30001
        port: 443
        protocol: TCP
        targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard
      sessionAffinity: None
      type: LoadBalancer
    # Create the service account admin-user
    [root@kmaster ~]# kubectl create serviceaccount admin-user --namespace=kubernetes-dashboard
    serviceaccount/admin-user created
    # Bind the service account to the cluster-admin cluster role
    [root@kmaster dashborad]# cat admin-clusterrolebing.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kubernetes-dashboard
    [root@kmaster ~]# kubectl apply -f admin-clusterrolebing.yaml
    # Create a token secret for the service account
    [root@kmaster dashborad]# cat admin-bearerToken.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
      annotations:
        kubernetes.io/service-account.name: "admin-user"
    type: kubernetes.io/service-account-token
    [root@kmaster dashborad]# kubectl apply -f admin-bearerToken.yaml
    # Extract the access token
    [root@kmaster dashborad]# kubectl get secret admin-user -n kubernetes-dashboard -o jsonpath={".data.token"} | base64 -d
    eyJhbGciOiJSUzI1NiIsImtpZCI6ImwtQURRdGk4cjU5bDRITnNDX3k5aEJ1NVdpeDRGWndTUkJKZzB3eEhVaHcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIzOTVmZWIwYi03MWFlLTQ1NDUtYTI1ZC00ZWY5OTRmNjg4ZTMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.RQP34_HmghqumeH_plQKhGCcVo-LqQhe0fkEgnAbFxyEZ2m9X5cMCFjHJyp9RTRkpNIsD1nsD-6xDNIdZz-UOlwjqTXSDJJgRgxMeps4H9N61b83gqizaDPC8aYuEtucnt6zk2IpW5rbQwGJ6eM2m2gGVTNttljtgwVc9tmKlhwI8NcZAqlPN1hsC85_lfmCXzPXXokZgraS55XFJgwJ6cUt83ikSnRny3vLL3L4gZgQovQL_wr2iqvCmL7TGkggErfAAYrZBt1npB0xbyxhrJPerNZjEjlzpZjzAbFoZej62fzO_idHrLkJ5c68YyBT8HDpB2QPqrRgNV8E2M858w
    # Another way to obtain a token
    [root@kmaster dashborad]# kubectl get secrets -n kubernetes-dashboard
    NAME                               TYPE                                  DATA   AGE
    admin-user                         kubernetes.io/service-account-token   3      32m
    admin-user-token-qt7fc             kubernetes.io/service-account-token   3      36m
    default-token-79x76                kubernetes.io/service-account-token   3      16h
    kubernetes-dashboard-certs         Opaque                                0      16h
    kubernetes-dashboard-csrf          Opaque                                1      16h
    kubernetes-dashboard-key-holder    Opaque                                2      16h
    kubernetes-dashboard-token-bjbc5   kubernetes.io/service-account-token   3      16h
    [root@kmaster dashborad]# kubectl describe secrets kubernetes-dashboard-token-bjbc5
    Name:         kubernetes-dashboard-token-bjbc5
    Namespace:    kubernetes-dashboard
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard
                  kubernetes.io/service-account.uid: 478aae44-ce08-4119-bc4f-012b37ecd49d
    Type:  kubernetes.io/service-account-token
    Data
    ====
    ca.crt:     1066 bytes
    namespace:  20 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6ImwtQURRdGk4cjU5bDRITnNDX3k5aEJ1NVdpeDRGWndTUkJKZzB3eEhVaHcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1iamJjNSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ3OGFhZTQ0LWNlMDgtNDExOS1iYzRmLTAxMmIzN2VjZDQ5ZCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.ohXCtpbV-p7uw81C-bX5clWAIxMSj2sHaiykzy1KWLT08-L88GprJ3MsqP1Y7kHYGtnIs98fvs48VGh1rRcjyCaO51gEpOmJmorESgqui15A166S8NHvZAuo7xAWNHQogkJnfjkmBdgUu2eYG4N88bKcR0hZPoz5bjmHo6hA0vB4_zWn2TOOv7msOen_rC3GzhAwGoxRXppmHx0xvu_8HKu1nSTbP4tn1MTXdCwZW2OGSWwJvM26pyFT8v3R2F22zRy_ilGMDdcxQPWvkXLbNO9nT6QH42ptYvBszjI1smVpuB_BpD9qEvYCbNx47LZvCbLK7Enj5UMpwpxH5ATD2w
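A service-account token like the ones printed above is a JWT: three base64url segments separated by dots, with the middle segment carrying claims such as the service account's name. The sketch below decodes that middle segment using only coreutils. To stay self-contained it builds a hypothetical stand-in token (made-up header and signature, one claim modelled on admin-user) instead of reusing a real one; the function names are also invented for this example.

```shell
# Encode stdin as unpadded base64url, the encoding used for JWT segments.
b64url_encode() {
  base64 | tr -d '=\n' | tr '/+' '_-'
}

# Print the decoded payload (second dot-separated segment) of a JWT.
jwt_payload() {
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64 -d expects padding, so restore the '=' characters that JWTs omit.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}

# Hypothetical stand-in token; it is not signed and cannot be used to log in.
claims='{"sub":"system:serviceaccount:kubernetes-dashboard:admin-user"}'
header=$(printf '%s' '{"alg":"none"}' | b64url_encode)
body=$(printf '%s' "$claims" | b64url_encode)
token="${header}.${body}.signature"

jwt_payload "$token"
echo
```

Running `jwt_payload` on a real token is a quick way to confirm which service account and namespace it belongs to before pasting it into the dashboard login page. Because the payload is only encoded, not encrypted, tokens should be treated as secrets.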

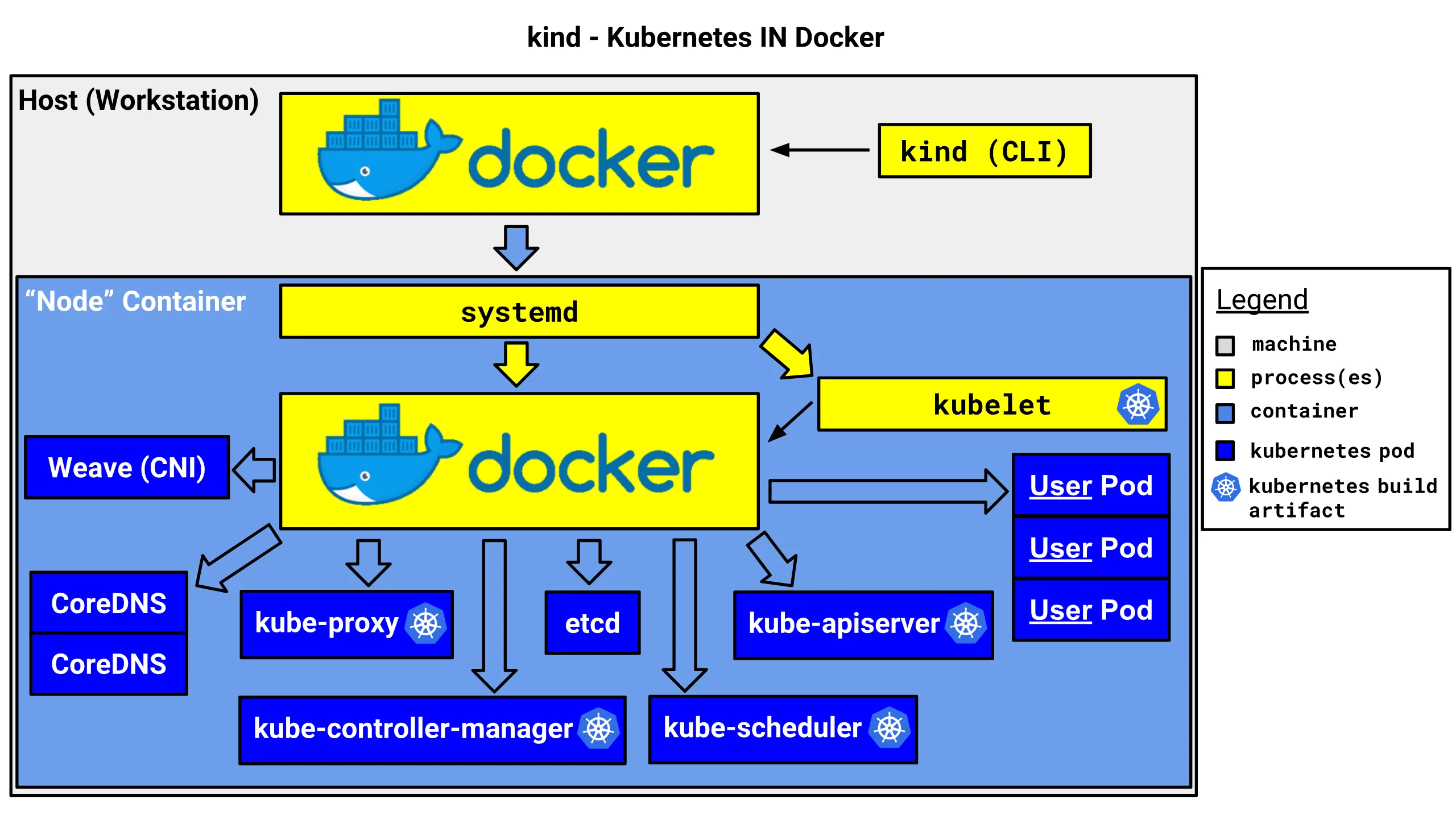

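Package management

Building a WordPress site requires creating a PV and PVC for each pod and then creating the application's pods and services one by one. If all of these steps are written into files and placed in a single directory (a chart), invoking the chart performs the whole deployment in one operation, and the resulting running instance is called a release. By analogy with containers: a chart is like an image, a release is like a container created from that image, and a chart repository is like an image registry. Helm is the tool that manages all three.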
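To show what "a directory of files" means in practice, here is a minimal sketch that builds a chart skeleton by hand. The chart name `demo-chart`, its values and the single template are placeholders chosen for illustration; a real chart such as the MySQL one contains many more templates.

```shell
# Build a minimal chart directory in a throwaway location.
chart_dir=$(mktemp -d)/demo-chart
mkdir -p "$chart_dir/templates"

# Chart.yaml: chart metadata. apiVersion v2 is the chart format used by Helm 3.
cat > "$chart_dir/Chart.yaml" <<'EOF'
apiVersion: v2
name: demo-chart
description: Placeholder chart used to illustrate the layout
version: 0.1.0
EOF

# values.yaml: default settings that templates read through .Values
cat > "$chart_dir/values.yaml" <<'EOF'
replicaCount: 1
image: nginx
EOF

# templates/: Kubernetes manifests containing template placeholders
cat > "$chart_dir/templates/deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-web
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}-web
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}-web
    spec:
      containers:
      - name: web
        image: {{ .Values.image }}
EOF

# List the files that make up the chart.
find "$chart_dir" -type f | sort
```

With Helm installed and a cluster available, `helm install demo "$chart_dir"` would render the template with the values and create a release named demo; installing the same chart again under a different name would create a second, independent release.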

Installing Helm

Helm v3 is installed on the master node. Like kubectl, it is a client-side tool that works through the Kubernetes API.

    # Download the Helm install script and run it
    [root@kmaster helm]# curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
    [root@kmaster helm]# chmod 700 get_helm.sh
    [root@kmaster helm]# ./get_helm.sh
    Downloading https://get.helm.sh/helm-v3.12.3-linux-amd64.tar.gz
    Verifying checksum... Done.
    Preparing to install helm into /usr/local/bin
    helm installed into /usr/local/bin/helm
    [root@kmaster helm]# helm version
    version.BuildInfo{Version:"v3.12.3", GitCommit:"3a31588ad33fe3b89af5a2a54ee1d25bfe6eaa5e", GitTreeState:"clean", GoVersion:"go1.20.7"}
    # Enable shell completion for the helm command
    [root@kmaster helm]# helm completion bash > ~/.helmrc
    [root@kmaster helm]# echo "source ~/.helmrc" >> ~/.bashrc
    [root@kmaster helm]# source ~/.bashrc

Repository management

    # Installing applications requires adding a repo to Helm first
    # List the existing repositories; there are none yet
    [root@kmaster helm]# helm repo list
    Error: no repositories to show
    # Add the official stable repository
    [root@kmaster helm]# helm repo add stable https://charts.helm.sh/stable
    "stable" has been added to your repositories
    # Create the experiment namespace nshelm
    [root@kmaster helm]# kubectl create namespace nshelm
    namespace/nshelm created
    [root@kmaster helm]# kubens nshelm
    Context "kubernetes-admin@kubernetes" modified.
    Active namespace is "nshelm".
    # Search the repository
    [root@kmaster helm]# helm search repo mysql
    NAME                               CHART VERSION   APP VERSION   DESCRIPTION
    stable/mysql                       1.6.9           5.7.30        DEPRECATED - Fast, reliable, scalable, and easy...
    stable/mysqldump                   2.6.2           2.4.1         DEPRECATED! - A Helm chart to help backup MySQL...
    stable/prometheus-mysql-exporter   0.7.1           v0.11.0       DEPRECATED A Helm chart for prometheus mysql ex...
    stable/percona                     1.2.3           5.7.26        DEPRECATED - free, fully compatible, enhanced, ...
    stable/percona-xtradb-cluster      1.0.8           5.7.19        DEPRECATED - free, fully compatible, enhanced, ...
    stable/phpmyadmin                  4.3.5           5.0.1         DEPRECATED phpMyAdmin is an mysql administratio...
    stable/gcloud-sqlproxy             0.6.1           1.11          DEPRECATED Google Cloud SQL Proxy
    stable/mariadb                     7.3.14          10.3.22       DEPRECATED Fast, reliable, scalable, and easy t...

Deploying a MySQL application

    [root@kmaster helm]# helm pull stable/mysql --version=1.6.8
    [root@kmaster helm]# ls
    get_helm.sh  mysql-1.6.8.tgz
    # Unpack the chart archive (this step is implied by the listing that follows)
    [root@kmaster helm]# tar -xzf mysql-1.6.8.tgz
    [root@kmaster helm]# tree mysql
    mysql
    ├── Chart.yaml
    ├── README.md
    ├── templates
    │   ├── configurationFiles-configmap.yaml
    │   ├── deployment.yaml
    │   ├── _helpers.tpl
    │   ├── initializationFiles-configmap.yaml
    │   ├── NOTES.txt
    │   ├── pvc.yaml
    │   ├── secrets.yaml
    │   ├── serviceaccount.yaml
    │   ├── servicemonitor.yaml
    │   ├── svc.yaml
    │   └── tests
    │       ├── test-configmap.yaml
    │       └── test.yaml
    └── values.yaml

    2 directories, 15 files
    # Edit values.yaml: set the root password, create the user tom (both passwords are redhat) and the database blog
    [root@kmaster helm]# cat mysql/values.yaml
    image: "mysql"
    imageTag: "5.7.30"
    strategy:
      type: Recreate
    busybox:
      image: "busybox"
      tag: "latest"
    testFramework:
      enabled: false
      image: "bats/bats"
      tag: "1.2.1"
      imagePullPolicy: IfNotPresent
      securityContext: {}
    mysqlRootPassword: redhat
    mysqlUser: tom
    mysqlPassword: redhat
    mysqlAllowEmptyPassword: false
    mysqlDatabase: blog
    ……
    # Install MySQL with Helm
    [root@kmaster helm]# cd mysql/
    [root@kmaster mysql]# ls
    Chart.yaml  README.md  templates  values.yaml
    [root@kmaster mysql]# helm install db .
    NAME: db
    LAST DEPLOYED: Wed Sep 27 20:44:48 2023
    NAMESPACE: nshelm
    STATUS: deployed
    REVISION: 1
    # Check the installation
    [root@kmaster mysql]# helm ls
    NAME   NAMESPACE   REVISION   UPDATED                                   STATUS     CHART         APP VERSION
    db     nshelm      1          2023-09-27 20:44:48.534693697 +0800 CST   deployed   mysql-1.6.8   5.7.30
    # Get the pod status and its IP address
    [root@kmaster mysql]# kubectl get pods -o wide
    NAME                        READY   STATUS    RESTARTS   AGE   IP
    db-mysql-5b5c68c5c9-sf8vh   1/1     Running   0          52s   10.244.195.137
    # Verify the MySQL deployment
    [root@kmaster mysql]# mysql -uroot -predhat -h10.244.195.137
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MySQL connection id is 10
    Server version: 5.7.30 MySQL Community Server (GPL)

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MySQL [(none)]> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | blog               |
    | mysql              |
    | performance_schema |
    | sys                |
    +--------------------+
    5 rows in set (0.00 sec)

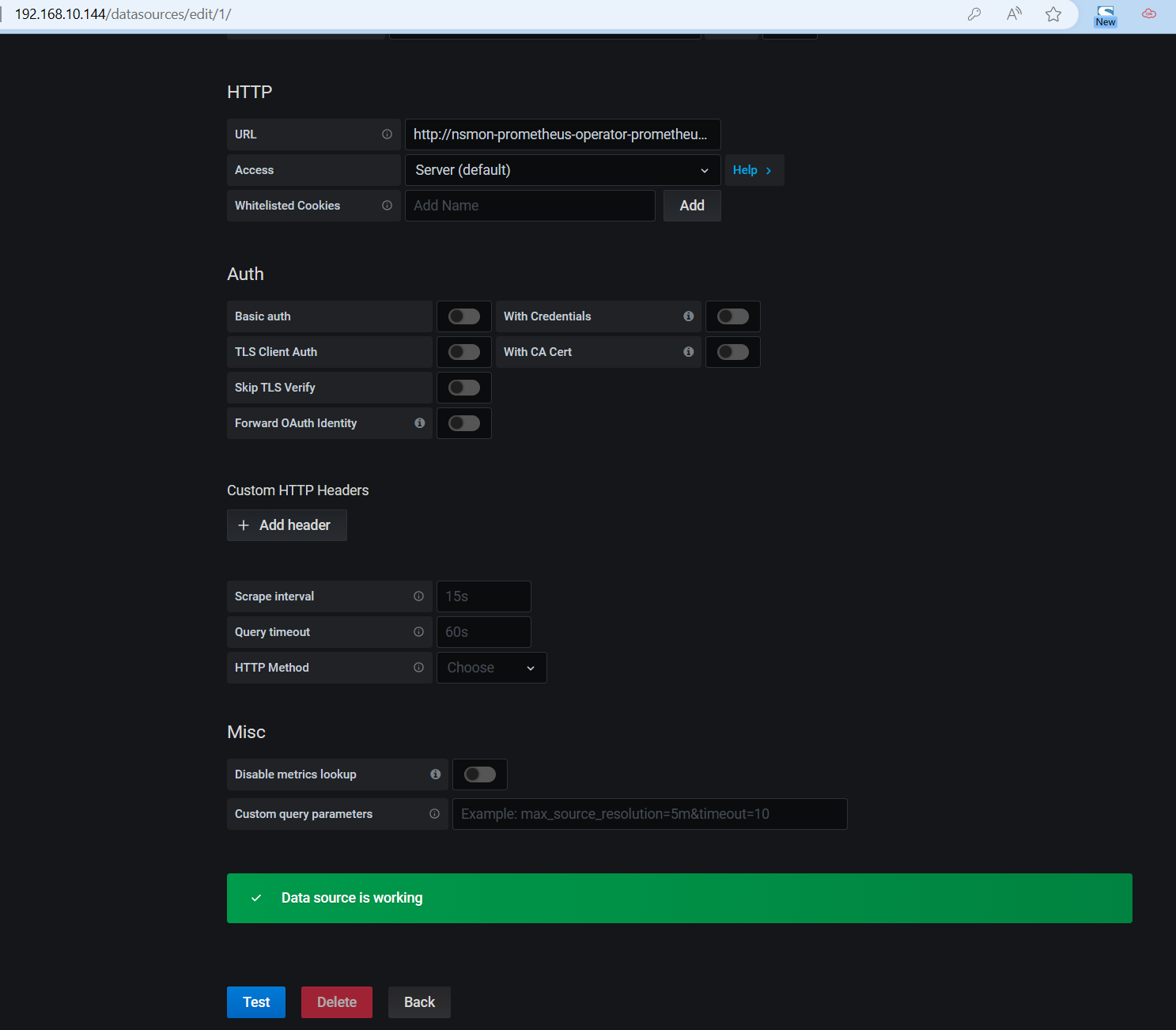

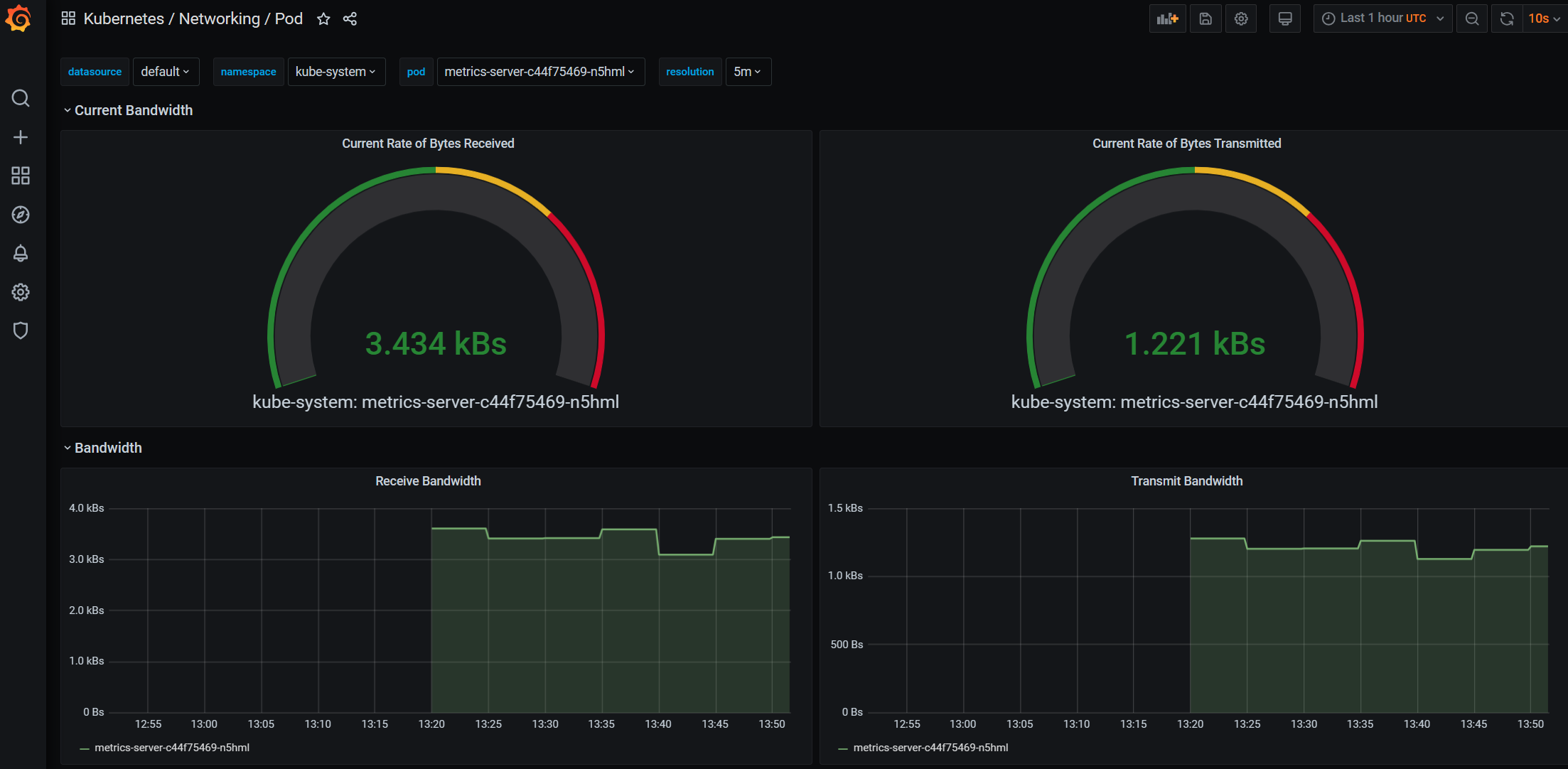

Deploying Prometheus

Prometheus Operator is a monitoring solution built specifically for Kubernetes, developed by CoreOS on top of Prometheus.

    # Create a namespace for testing
    [root@kmaster ~]# kubectl create ns nsmon
    namespace/nsmon created
    [root@kmaster ~]# kubens nsmon
    Context "kubernetes-admin@kubernetes" modified.
    Active namespace is "nsmon".
    [root@kmaster ~]# cd helm/
    # Install Prometheus from the stable repository
    [root@kmaster helm]# helm search repo prometheus-operator
    NAME                         CHART VERSION   APP VERSION   DESCRIPTION
    stable/prometheus-operator   9.3.2           0.38.1        DEPRECATED Provides easy monitoring definitions...
    [root@kmaster helm]# helm install nsmon stable/prometheus-operator
    WARNING: This chart is deprecated
    ……
    # Installation complete
    [root@kmaster helm]# kubectl get pods --no-headers
    alertmanager-nsmon-prometheus-operator-alertmanager-0   2/2   Running   0   4m47s
    nsmon-grafana-bdb4c489d-g5gkp                           2/2   Running   0   8m13s
    nsmon-kube-state-metrics-6f8bd6c5f6-h8ds8               1/1   Running   0   8m13s
    nsmon-prometheus-node-exporter-7sw9c                    1/1   Running   0   8m13s
    nsmon-prometheus-node-exporter-fwjmj                    1/1   Running   0   8m13s
    nsmon-prometheus-node-exporter-g28vl                    1/1   Running   0   8m13s
    nsmon-prometheus-operator-operator-574944bd6b-pf7p4     2/2   Running   0   8m13s
    prometheus-nsmon-prometheus-operator-prometheus-0       3/3   Running   1   4m37s
    # Expose Grafana
    # Change the service type
    [root@kmaster svc]# kubectl edit svc -n nsmon nsmon-grafana
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        meta.helm.sh/release-name: nsmon
        meta.helm.sh/release-namespace: nsmon
        metallb.universe.tf/ip-allocated-from-pool: ip-pool
      creationTimestamp: "2023-09-28T13:08:17Z"
      labels:
        app.kubernetes.io/instance: nsmon
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: grafana
        app.kubernetes.io/version: 7.0.3
        helm.sh/chart: grafana-5.3.0
      name: nsmon-grafana
      namespace: nsmon
      resourceVersion: "520405"
      selfLink: /api/v1/namespaces/nsmon/services/nsmon-grafana
      uid: 90a06d9d-d01f-45fd-9109-6b46a4924491
    spec:
      clusterIP: 10.101.179.254
      clusterIPs:
      - 10.101.179.254
      externalTrafficPolicy: Cluster
      ipFamilies:
      - IPv4
      ipFamilyPolicy: SingleStack
      ports:
      - name: service
        nodePort: 32668
        port: 80
        protocol: TCP
        targetPort: 3000
      selector:
        app.kubernetes.io/instance: nsmon
        app.kubernetes.io/name: grafana
      sessionAffinity: None
      type: LoadBalancer   # changed from ClusterIP to LoadBalancer
    [root@kmaster svc]# kubectl get svc
    NAME            TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)
    nsmon-grafana   LoadBalancer   10.101.179.254   192.168.10.144   80:32668/TCP
    # Look up the user name and password
    [root@kmaster svc]# kubectl get secrets nsmon-grafana -o yaml |head -5
    apiVersion: v1
    data:
      admin-password: cHJvbS1vcGVyYXRvcg==
      admin-user: YWRtaW4=
      ldap-toml: ""
    [root@kmaster svc]# echo -n "YWRtaW4="|base64 -d
    admin
    [root@kmaster svc]# echo -n "cHJvbS1vcGVyYXRvcg=="|base64 -d
    prom-operator
    # The user name is admin and the password is prom-operator