Deploying AWX, the Ansible Management Platform


Concepts

K3s

K3s is a lightweight Kubernetes distribution that is simple to use and light on resources. A single installation script is all it takes to install K3s on a host.

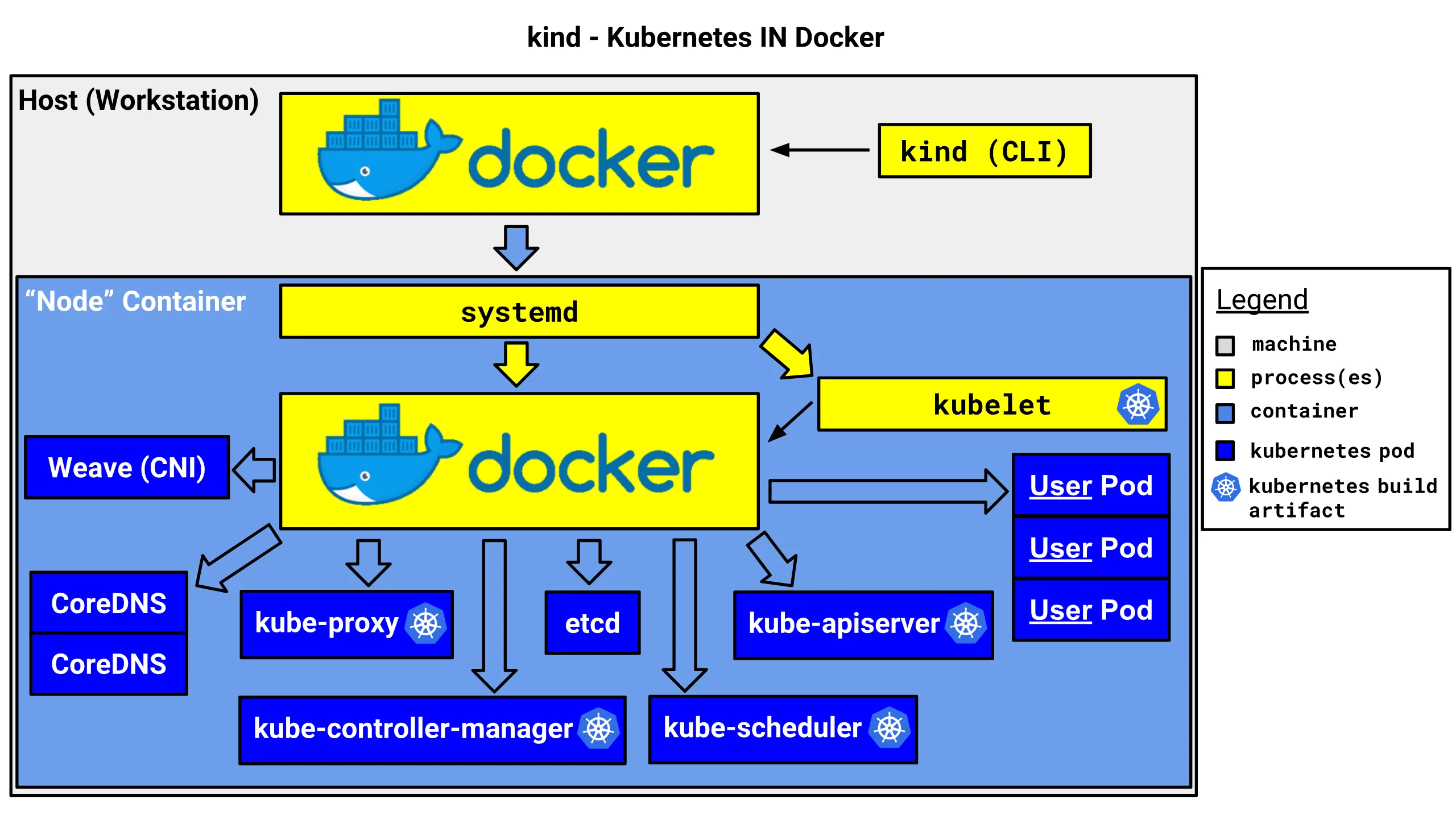

AWX

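AWX is the upstream project of Ansible Tower, the Ansible automation platform run by Red Hat. It exposes Ansible management and maintenance through a graphical web UI. The architecture is illustrated by the following diagram, borrowed from Ansible Tower: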

For more information, see the following links:

- Ansible

- AWX

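Since v18, Ansible AWX no longer supports standalone or Docker-based deployment and must be deployed on Kubernetes. To keep the environment and the steps simple, this guide uses a single-node K3s cluster as the base for deploying AWX.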

Deployment

Environment preparation


```shell
# This deployment is based on Rocky Linux 9.2
# Host: 2 CPU cores / 4 GB RAM / 40 GB disk
# Docker and kubectl must be installed beforehand

# Firewall: make "trusted" the default zone
firewall-cmd --set-default-zone=trusted
firewall-cmd --reload

# Disable SELinux (takes effect after the reboot at the end of this script)
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# Disable swap now and remove it from fstab
swapoff -a ; sed -i '/swap/d' /etc/fstab

# Kernel modules to load at boot
cat > /etc/modules-load.d/containerd.conf <<EOF
overlay
br_netfilter
EOF

# Kernel parameters required for container networking
cat >> /etc/sysctl.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Remove Podman and install supporting packages
dnf remove -y 'podman*'
dnf install -y yum-utils device-mapper-persistent-data lvm2
dnf install -y ipvsadm bridge-utils jq
dnf install -y bash-completion

# Add the Docker CE repository (Aliyun mirror)
dnf config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# Refresh the cache, install containerd from the Docker CE repo and pin its version
dnf makecache
dnf install -y containerd.io python3-dnf-plugin-versionlock
dnf versionlock add containerd.io

# Generate the default containerd configuration
containerd config default > /etc/containerd/config.toml

# Adjust it:
# - oom_score 0 -> -999: the kernel OOM killer then picks containers rather than the containerd daemon
# - pull the pause (sandbox) image through the lank8s.cn proxy instead of registry.k8s.io
# - read registry mirror settings from /etc/containerd/certs.d
sed -e 's|oom_score = 0|oom_score = -999|g' -e 's|registry.k8s.io|lank8s.cn|g' -e 's|config_path = ""|config_path = "/etc/containerd/certs.d"|g' -i.bak /etc/containerd/config.toml

# Create the registry mirror configuration files
mkdir -pv /etc/containerd/certs.d/docker.io/
cat >> /etc/containerd/certs.d/docker.io/hosts.toml <<EOF
[host."https://37y8py0j.mirror.aliyuncs.com"]
EOF

mkdir -pv /etc/containerd/certs.d/registry.k8s.io/
cat >> /etc/containerd/certs.d/registry.k8s.io/hosts.toml <<EOF
[host."https://lank8s.cn"]
EOF

# Download the nerdctl management tool and extract it into /usr/local/bin
wget https://github.com/containerd/nerdctl/releases/download/v1.6.2/nerdctl-1.6.2-linux-amd64.tar.gz
tar zxvf nerdctl-1.6.2-linux-amd64.tar.gz -C /usr/local/bin/
chmod +x /usr/local/bin/nerdctl

# Enable nerdctl bash completion
echo "source <(nerdctl completion bash)" >> ~/.bashrc

# Reload systemd and start containerd now and at boot
systemctl daemon-reload
systemctl enable --now containerd

# Load GitHub hosts entries (optional)
# sh -c 'sed -i "/# GitHub520 Host Start/Q" /etc/hosts && curl https://raw.hellogithub.com/hosts >> /etc/hosts'

# Reboot
sync
ldconfig
reboot
```
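
Re-running the preparation script appends another `[host...]` table to each `hosts.toml`, because the heredocs use `cat >>`. The sketch below is one way to make that step idempotent. The function name `write_mirror_hosts` and its optional root-prefix argument are hypothetical; `server` and `capabilities` are standard keys of containerd's `hosts.toml` format, with `server` naming the upstream registry behind the mirror.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: (re)write <root>/etc/containerd/certs.d/<registry>/hosts.toml
# so containerd tries <mirror> first for <registry>, with <server> as the upstream.
# The optional 4th argument is a root prefix (default "", i.e. the real /etc),
# which lets you try the function in a scratch directory first.
write_mirror_hosts() {
  local registry=$1 server=$2 mirror=$3 root=${4:-}
  local dir="$root/etc/containerd/certs.d/$registry"
  mkdir -p "$dir"
  # ">" truncates the file, so repeated runs leave exactly one [host] table
  cat > "$dir/hosts.toml" <<EOF
server = "$server"

[host."$mirror"]
  capabilities = ["pull", "resolve"]
EOF
}

# Usage (as root), for the two registries configured in the script:
# write_mirror_hosts docker.io https://registry-1.docker.io https://37y8py0j.mirror.aliyuncs.com
# write_mirror_hosts registry.k8s.io https://registry.k8s.io https://lank8s.cn
```

Restricting `capabilities` to pull and resolve keeps pushes from going to a read-only mirror; omit the line to keep containerd's default capabilities.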

Deploying K3s


```shell
# Install from the China mirror provided by the official community
# 1. The K3s install script is fetched from Aliyun object storage, and the K3s version is resolved from the China channel.
# 2. INSTALL_K3S_MIRROR=cn makes the K3s binaries download from Aliyun object storage.
# 3. --system-default-registry pulls the K3s system images from the Aliyun registry (registry.cn-hangzhou.aliyuncs.com).
curl -sfL \
  https://rancher-mirror.oss-cn-beijing.aliyuncs.com/k3s/k3s-install.sh | \
  INSTALL_K3S_MIRROR=cn sh -s - \
  --system-default-registry "registry.cn-hangzhou.aliyuncs.com"

# Check the node
[root@Ansbile ~]# kubectl get nodes
NAME      STATUS   ROLES                  AGE   VERSION
ansbile   Ready    control-plane,master   13m   v1.27.6+k3s1

# Check resource usage
[root@Ansbile ~]# kubectl top nodes
NAME      CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
ansbile   74m          3%     1475Mi          76%

# Check the versions
[root@Ansbile ~]# kubectl version --short
Client Version: v1.27.6+k3s1
Kustomize Version: v5.0.1
Server Version: v1.27.6+k3s1

# Configure registry mirrors for K3s (all registries under a single "mirrors:" key)
cat > /etc/rancher/k3s/registries.yaml <<EOF
mirrors:
  docker.io:
    endpoint:
      - "https://docker.mirrors.ustc.edu.cn"
  gcr.io:
    endpoint:
      - "https://gcr.lank8s.cn"
  registry.k8s.io:
    endpoint:
      - "https://registry.lank8s.cn"
EOF
systemctl restart k3s

# Install Helm
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
chmod 700 get_helm.sh
sh get_helm.sh
helm version

# Add a Helm repository
helm repo add stable https://charts.helm.sh/stable
helm repo update

# Enable Helm bash completion
helm completion bash > ~/.helmrc
echo "source ~/.helmrc" >> ~/.bashrc

# Enable kubectl bash completion
echo "source <(kubectl completion bash)" >> ~/.bashrc

# Install kubens for switching namespaces
curl -L https://github.com/ahmetb/kubectx/releases/download/v0.9.1/kubens -o /usr/local/bin/kubens
chmod +x /usr/local/bin/kubens

# Reload the shell environment
source ~/.bashrc

# Point kubectl and helm at the K3s kubeconfig explicitly
kubectl --kubeconfig /etc/rancher/k3s/k3s.yaml get pods --all-namespaces
helm --kubeconfig /etc/rancher/k3s/k3s.yaml ls --all-namespaces
```
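
K3s reads `registries.yaml` as a single YAML document, so every mirror has to sit under one top-level `mirrors:` key; appending a separate `mirrors:` block per registry produces duplicate keys, which YAML parsers either reject or collapse to a single entry. If the mirror list changes often, a small generator keeps the file well-formed. The function name `render_k3s_registries` and its `registry=endpoint` argument format are hypothetical; the `mirrors`/`endpoint` layout is the one used in the step above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print a K3s registries.yaml with every mirror
# under one top-level "mirrors:" key.
# Each argument has the form <registry>=<endpoint URL>.
render_k3s_registries() {
  echo "mirrors:"
  local pair registry endpoint
  for pair in "$@"; do
    registry=${pair%%=*}   # text before the first '='
    endpoint=${pair#*=}    # text after the first '='
    printf '  %s:\n    endpoint:\n      - "%s"\n' "$registry" "$endpoint"
  done
}

# Usage (as root); K3s only reads the file at startup, hence the restart:
# render_k3s_registries \
#   docker.io=https://docker.mirrors.ustc.edu.cn \
#   gcr.io=https://gcr.lank8s.cn \
#   registry.k8s.io=https://registry.lank8s.cn > /etc/rancher/k3s/registries.yaml
# systemctl restart k3s
```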

Test case


```shell
# Create a test deployment with two replicas
[root@Ansbile ~]# kubectl create deploy whoami --image=traefik/whoami --replicas=2
deployment.apps/whoami created

# Test a LoadBalancer service
[root@Ansbile ~]# kubectl expose deploy whoami --type=LoadBalancer --port=80 --external-ip 192.168.10.102
service/whoami exposed
[root@Ansbile ~]# kubectl get svc
NAME         TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE
kubernetes   ClusterIP      10.43.0.1       <none>           443/TCP        162m
whoami       LoadBalancer   10.43.193.248   192.168.10.102   80:30573/TCP   31m
[root@Ansbile ~]# curl 192.168.10.102
Hostname: whoami-6f57d5d6b5-7qmp5
IP: 127.0.0.1
IP: ::1
IP: 10.42.0.38
IP: fe80::209c:10ff:fe71:6db8
RemoteAddr: 10.42.0.1:44357
GET / HTTP/1.1
Host: 192.168.10.102
User-Agent: curl/7.76.1
Accept: */*

# Test a NodePort service
[root@Ansbile ~]# kubectl delete svc whoami
service "whoami" deleted
[root@Ansbile ~]# kubectl expose deploy whoami --type=NodePort --port=80
service/whoami exposed
[root@Ansbile ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.43.0.1      <none>        443/TCP        178m
whoami       NodePort    10.43.75.150   <none>        80:30047/TCP   6s
[root@Ansbile ~]# curl 192.168.10.5:30047
Hostname: whoami-6f57d5d6b5-7qmp5
IP: 127.0.0.1
IP: ::1
IP: 10.42.0.38
IP: fe80::209c:10ff:fe71:6db8
RemoteAddr: 10.42.0.1:39611
GET / HTTP/1.1
Host: 192.168.10.5:30047
User-Agent: curl/7.76.1
Accept: */*
[root@Ansbile ~]# curl 192.168.10.5:30047
Hostname: whoami-6f57d5d6b5-pzgg8
IP: 127.0.0.1
IP: ::1
IP: 10.42.0.39
IP: fe80::dccd:bff:fe91:b7b7
RemoteAddr: 10.42.0.1:37584
GET / HTTP/1.1
Host: 192.168.10.5:30047
User-Agent: curl/7.76.1
Accept: */*
```
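
With `--replicas=2`, repeated requests to the NodePort should eventually be answered by both pods, as the two different `Hostname:` values in the transcript show. A minimal sketch that automates this check, assuming the whoami response format shown above; the function name `count_backends` and the request count of 20 are arbitrary choices.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Count how many distinct pods answered, given concatenated whoami
# responses on stdin (each response contains a "Hostname: <pod>" line).
count_backends() {
  grep '^Hostname:' | sort -u | wc -l | tr -d ' '
}

# Usage: send 20 requests to the NodePort from the test and count responders.
# With two replicas the expected result is 2; kube-proxy chooses backends
# randomly, so a short run may occasionally report only 1.
# for _ in $(seq 20); do curl -s 192.168.10.5:30047; done | count_backends
```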

Installing AWX

Deployment


```shell
# Add the AWX Operator Helm repository
[root@Ansible ~]# helm repo add awx-operator https://ansible.github.io/awx-operator/
"awx-operator" has been added to your repositories

# Refresh the repositories
[root@Ansible ~]# helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "awx-operator" chart repository
...Successfully got an update from the "stable" chart repository
Update Complete. ⎈Happy Helming!⎈

# Search for awx-operator
[root@Ansible ~]# helm search repo awx-operator
NAME                        CHART VERSION   APP VERSION   DESCRIPTION
awx-operator/awx-operator   2.7.0           2.7.0         A Helm chart for the AWX Operator

# Download and unpack the awx-operator chart
[root@Ansible ~]# helm pull awx-operator/awx-operator --version=2.7.0
[root@Ansible ~]# tar zxf awx-operator-2.7.0.tgz
[root@Ansible ~]# tree awx-operator
awx-operator
├── Chart.yaml
├── crds
│   ├── customresourcedefinition-awxbackups.awx.ansible.com.yaml
│   ├── customresourcedefinition-awxrestores.awx.ansible.com.yaml
│   └── customresourcedefinition-awxs.awx.ansible.com.yaml
├── README.md
├── templates
│   ├── awx-deploy.yaml
│   ├── clusterrole-awx-operator-metrics-reader.yaml
│   ├── clusterrole-awx-operator-proxy-role.yaml
│   ├── clusterrolebinding-awx-operator-proxy-rolebinding.yaml
│   ├── configmap-awx-operator-awx-manager-config.yaml
│   ├── deployment-awx-operator-controller-manager.yaml
│   ├── _helpers.tpl
│   ├── NOTES.txt
│   ├── postgres-config.yaml
│   ├── role-awx-operator-awx-manager-role.yaml
│   ├── role-awx-operator-leader-election-role.yaml
│   ├── rolebinding-awx-operator-awx-manager-rolebinding.yaml
│   ├── rolebinding-awx-operator-leader-election-rolebinding.yaml
│   ├── serviceaccount-awx-operator-controller-manager.yaml
│   └── service-awx-operator-controller-manager-metrics-service.yaml
└── values.yaml

2 directories, 21 files

# Find the container images used by the operator; in this file, edit each image
# entry and add "imagePullPolicy: IfNotPresent" so locally imported images are used
[root@Ansible awx-operator]# grep image: templates/deployment-awx-operator-controller-manager.yaml
templates/deployment-awx-operator-controller-manager.yaml: image: gcr.io/kubebuilder/kube-rbac-proxy:v0.14.1
templates/deployment-awx-operator-controller-manager.yaml: image: quay.io/ansible/awx-operator:2.7.0

# Pull the images through a proxy mirror, or on an overseas VPS and copy the archive back
$ docker pull quay.io/ansible/awx-operator:2.7.0
$ docker pull gcr.io/kubebuilder/kube-rbac-proxy:v0.14.1
$ docker save gcr.io/kubebuilder/kube-rbac-proxy quay.io/ansible/awx-operator > awx-operator.tar

# K3s uses its own embedded containerd, so import the archive there
# (docker load would only make the images visible to Docker, not to K3s)
[root@Ansible ~]# k3s ctr images import awx-operator.tar

# Install from the local chart
[root@Ansible ~]# export KUBECONFIG=/etc/rancher/k3s/k3s.yaml
[root@Ansible awx-operator]# helm install -n awx --create-namespace my-awx-operator .
NAME: my-awx-operator
LAST DEPLOYED: Fri Oct 13 19:10:19 2023
NAMESPACE: awx
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
AWX Operator installed with Helm Chart version 2.7.0

# The deployment requires at least two CPU cores; with fewer, the pod stays Pending
[root@Ansible awx-operator]# kubectl get pods --namespace awx
NAME                                               READY   STATUS    RESTARTS   AGE
awx-operator-controller-manager-5644f9898c-x7xqh   0/2     Pending   0          40s

# Remove the Helm release and retry after adding resources
[root@Ansible awx-operator]# helm uninstall my-awx-operator --namespace awx
release "my-awx-operator" uninstalled

# Switch to the awx namespace
[root@Ansbile ~]# kubens awx
Context "default" modified.
Active namespace is "awx".

# Deploy again and check the result
[root@Ansible awx-operator]# helm install --create-namespace my-awx-operator .
[root@ansible awx-operator]# kubectl get pods
NAME                                               READY   STATUS
awx-operator-controller-manager-5644f9898c-dfd82   2/2     Running
[root@ansible awx-operator]# kubectl get svc
NAME                                              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)
awx-operator-controller-manager-metrics-service   ClusterIP   10.96.80.75   <none>        8443/TCP

# Create a StorageClass for dynamic provisioning (optional: K3s already ships
# a default "local-path" StorageClass; StorageClasses are cluster-scoped)
#[root@Kind AWX]# cat local-storage-class.yaml
#apiVersion: storage.k8s.io/v1
#kind: StorageClass
#metadata:
#  name: local-path
#provisioner: rancher.io/local-path
#volumeBindingMode: WaitForFirstConsumer
#[root@Ansbile AWX]# kubectl create -f local-storage-class.yaml

# Create the PVC
[root@Kind AWX]# cat pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-data-pvc
  namespace: awx
spec:
  storageClassName: local-path
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
[root@Ansbile AWX]# kubectl create -f pvc.yaml

# Create the AWX instance manifest
[root@Ansbile AWX]# cat ansible-awx.yaml
---
apiVersion: awx.ansible.com/v1beta1
kind: AWX
metadata:
  name: ansible-awx
  namespace: awx
spec:
  service_type: nodeport
  projects_persistence: true
  projects_storage_access_mode: ReadWriteOnce
  web_extra_volume_mounts: |
    - name: static-data
      mountPath: /var/lib/projects
  extra_volumes: |
    - name: static-data
      persistentVolumeClaim:
        claimName: static-data-pvc
[root@Ansbile AWX]# kubectl create -f ansible-awx.yaml

# Deployment finished; the pods are running
[root@Ansbile AWX]# kubectl get pods -l "app.kubernetes.io/managed-by=awx-operator"
NAME                                READY   STATUS    RESTARTS   AGE
ansible-awx-postgres-13-0           1/1     Running   0          6m55s
ansible-awx-task-684dbfd67c-vz62h   4/4     Running   0          4m42s
ansible-awx-web-6c64879999-vkbz6    3/3     Running   0          2m35s

# PVCs bound during the deployment (their PVs are provisioned automatically)
[root@Ansbile AWX]# kubectl get pvc
NAME                                    STATUS   VOLUME
postgres-13-ansible-awx-postgres-13-0   Bound    pvc-bb2a8f4b-612d-4b32-9715-3a3e4c53a08a
static-data-pvc                         Bound    pvc-1f430871-97ff-4828-a748-cad713268497
ansible-awx-projects-claim              Bound    pvc-a012ecc9-8e6d-4377-aee9-1803035a8210

# Services created by the deployment
[root@Ansbile AWX]# kubectl get svc -l "app.kubernetes.io/managed-by=awx-operator"
NAME                      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
ansible-awx-postgres-13   ClusterIP   None            <none>        5432/TCP       10m
ansible-awx-service       NodePort    10.43.114.158   <none>        80:30769/TCP   7m58s

# Get the password of the default admin user
[root@Ansbile ~]# kubectl get secret ansible-awx-admin-password -o go-template='{{range $k,$v := .data}}{{printf "%s: " $k}}{{if not $v}}{{$v}}{{else}}{{$v | base64decode}}{{end}}{{"\n"}}{{end}}'
password: 7ryIBAw5RBUzhw0K2BvHctuncIcID8dM
```
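
The AWX pods come up several minutes apart (compare the AGE column of the postgres, task and web pods), so it helps to wait for them before opening the portal. A minimal sketch that reads `kubectl get pods --no-headers` output and succeeds only when every pod is Running with all of its containers ready; the function name `awx_pods_ready` and the 15-second poll interval are hypothetical choices. `kubectl wait --for=condition=Ready pod -l app.kubernetes.io/managed-by=awx-operator --timeout=600s` is a built-in alternative, although it never succeeds for pods that end in the Completed state, which this sketch skips.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin
# and succeed only if every non-Completed pod is Running with READY n/n.
awx_pods_ready() {
  awk '
    $3 == "Completed" { next }           # skip finished one-off job pods
    {
      seen++
      split($2, r, "/")                   # READY column, e.g. "3/3"
      if ($3 != "Running" || r[1] != r[2]) bad++
    }
    END { if (seen > 0 && bad == 0) exit 0; exit 1 }
  '
}

# Usage: poll every 15 seconds until the AWX pods are up.
# until kubectl get pods -n awx --no-headers \
#     -l "app.kubernetes.io/managed-by=awx-operator" | awx_pods_ready; do
#   sleep 15
# done
```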

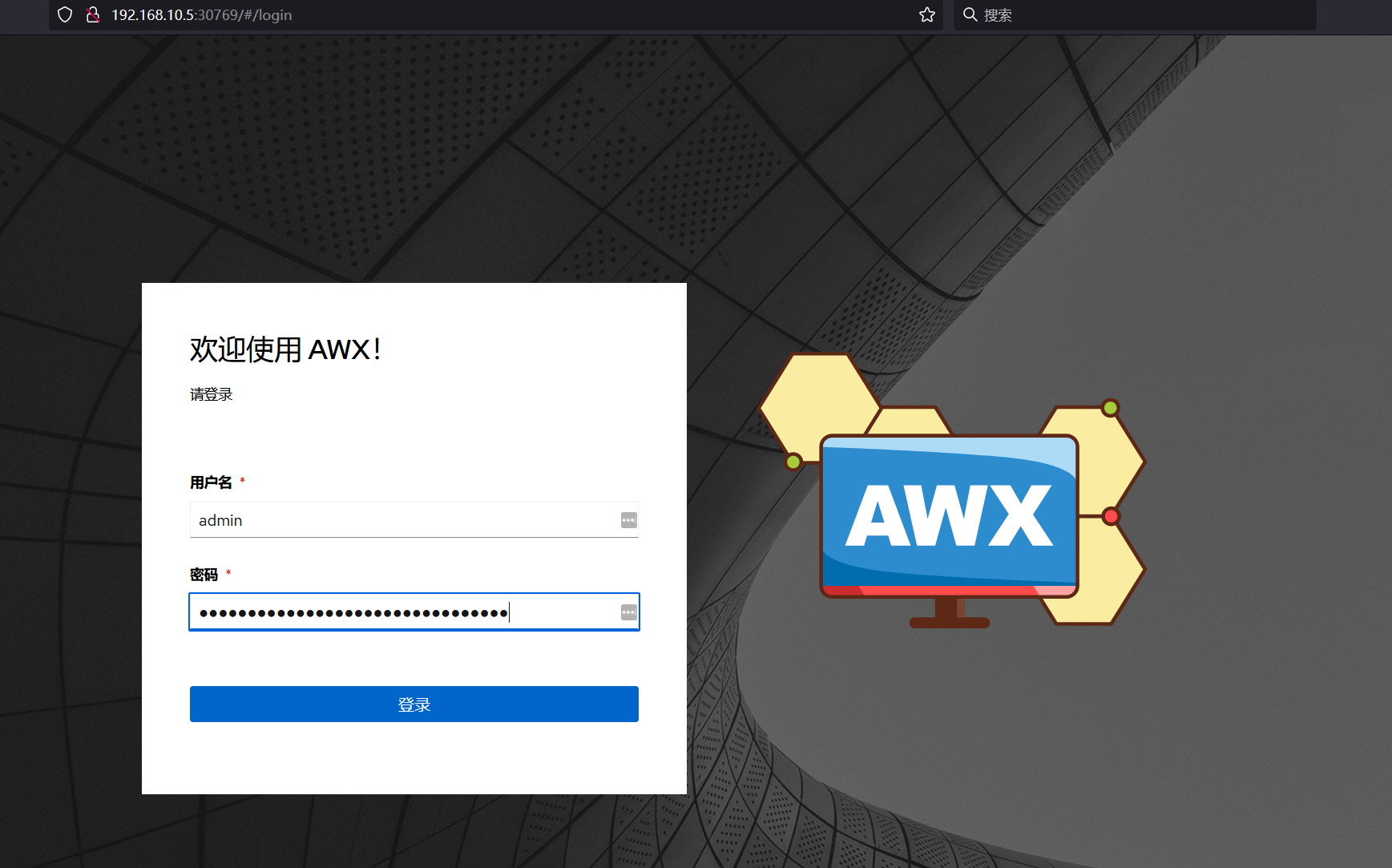

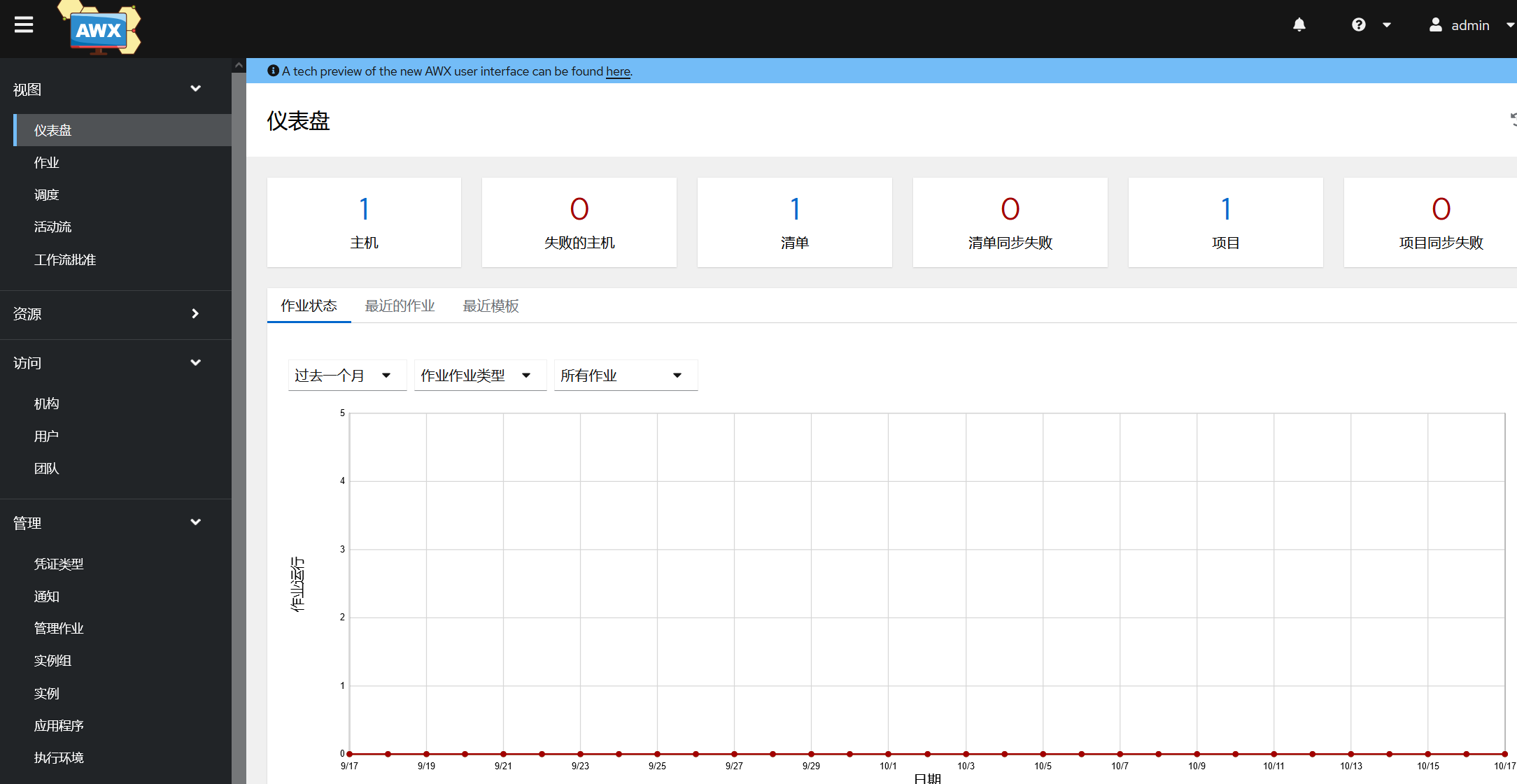

Access

- Log in to the portal page

- View the Dashboard

- Outstanding items:

  - Access via a domain name

  - Install an SSL certificate

References

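- kind: Kubernetes in Docker, the best way to run a Kubernetes cluster on a single machine

- How to install Ansible AWX on a Kubernetes cluster

- Creating a K8s cluster with Kind in an offline environment

- AWX Operator official documentation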